This example uses the aws-exfiltration-protection module.

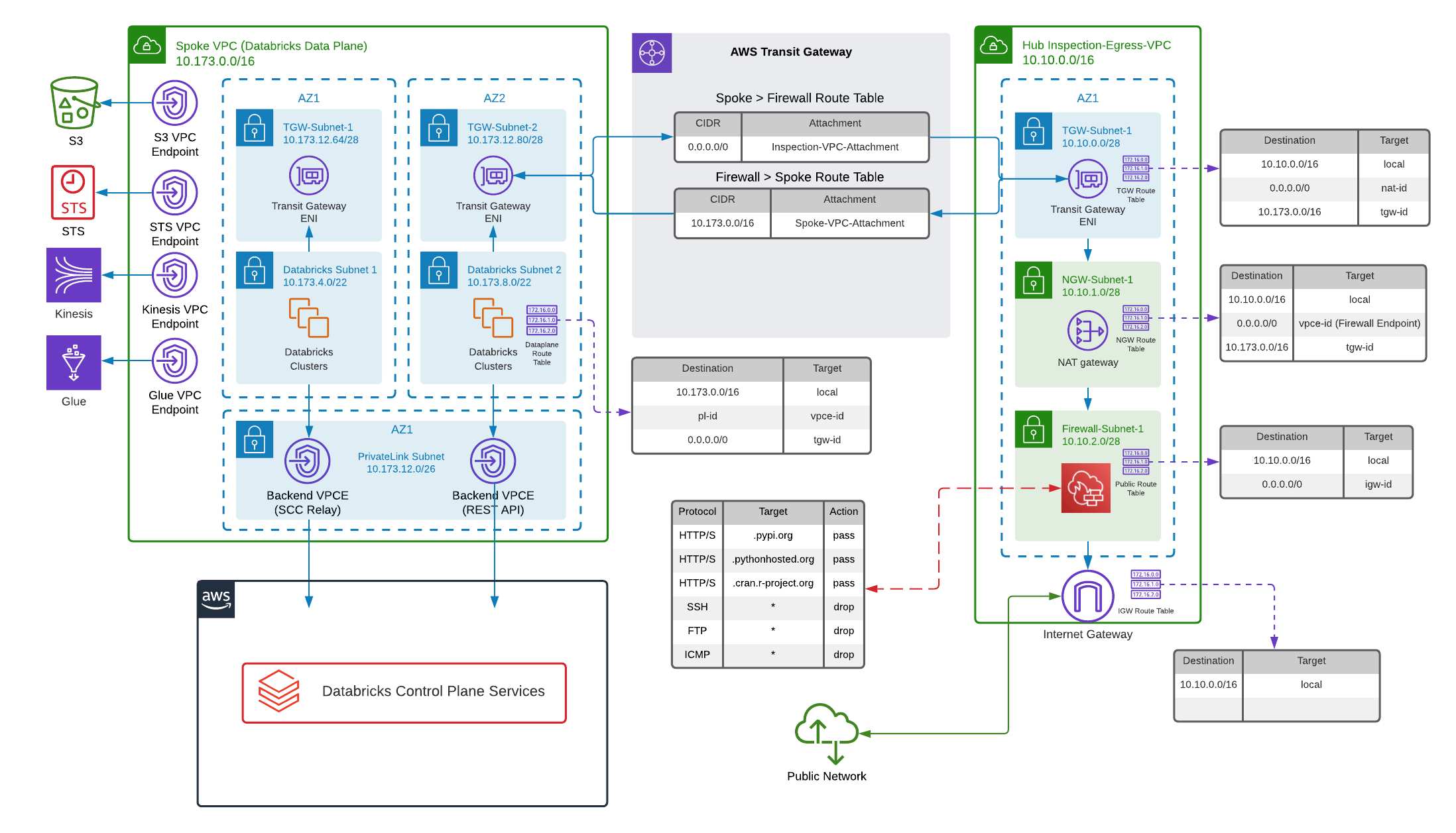

This template provides an example deployment of an AWS Databricks E2 workspace with a Hub & Spoke firewall for data exfiltration protection. Details are described in Data Exfiltration Protection With Databricks on AWS.

Note

If you are using AWS Firewall to block most traffic but allow the URLs that Databricks needs to connect to, update the configuration based on your region. You can get the configuration details for your region from the Firewall Appliance document.

You can optionally enable Private Link in the variables. Enabling Private Link on AWS requires the Databricks "Enterprise" tier, which is configured at the Databricks account level.

- Reference this module using one of the different module source types

- Add a `variables.tf` with the same content as in variables.tf

- Add a `terraform.tfvars` file and provide values for each defined variable

- Configure the following environment variables:

  - `TF_VAR_databricks_account_client_id`, set to the application ID of your Databricks account-level service principal with admin permission.

  - `TF_VAR_databricks_account_client_secret`, set to the client secret for your Databricks account-level service principal.

  - `TF_VAR_databricks_account_id`, set to the ID of your Databricks account. You can find this value in the corner of your Databricks account console.

- (Optional) Configure your remote backend

- Run `terraform init` to initialize Terraform and get the provider ready.

- Run `terraform plan` to validate and preview the deployment.

- Run `terraform apply` to create the resources.

- Run `terraform output -json` to print the URL (host) of the created Databricks workspace.
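
The environment variables and Terraform commands from the steps above could be combined into a small wrapper script. This is a minimal sketch, not part of the module: the values in angle brackets are placeholders you must replace, and the `deploy` function and the `deploy` argument are hypothetical names chosen for illustration. It assumes `terraform` is on your `PATH` and that `variables.tf` and `terraform.tfvars` already exist in the working directory.

```shell
#!/bin/sh
# Sketch of the deployment workflow; all values below are placeholders.
set -eu

# Credentials of the Databricks account-level service principal.
# Terraform maps each TF_VAR_<name> variable to the input variable <name>.
export TF_VAR_databricks_account_client_id="<application-id>"
export TF_VAR_databricks_account_client_secret="<client-secret>"
export TF_VAR_databricks_account_id="<account-id>"

# Hypothetical helper that runs the four Terraform steps in order.
deploy() {
  terraform init          # initialize Terraform and download the providers
  terraform plan          # validate and preview the deployment
  terraform apply         # create the resources (asks for confirmation)
  terraform output -json  # print outputs, including the workspace URL (host)
}

# Only deploy when the script is called with the "deploy" argument,
# so that running it without arguments just sets the variables.
if [ "${1:-}" = "deploy" ]; then
  deploy
fi
```

Saved as, for example, `deploy.sh` (a hypothetical file name), it would be run as `sh deploy.sh deploy`. Avoid committing real secrets in such a script; reading them from a secret store or your shell session is a safer choice.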