This chapter explains the core architectural components, their context views, and how HelloDATA works under the hood.

We distinguish between two main domains: the Business Domain and the Data Domain.

Resources encapsulated inside a Data Domain can be:

On top of that, you can add subsystems. These can be seen as extensions that make HelloDATA pluggable with additional tools. We currently support CloudBeaver for viewing your Postgres databases, RtD, and Gitea. You can add almost any tool with capabilities you'd like to have (data catalog, semantic layer, specific BI tool, Jupyter Notebooks, etc.).

Read more about Business and Data Domain access rights in Roles / Authorization Concept.

Zooming into several Data Domains that can exist within a Business Domain, we see an example of Data Domains A-C. Each Data Domain has persistent storage, in our case Postgres (see more details in the Infrastructure Storage chapter below).

Each Data Domain might import different source systems; some might even be used in several Data Domains, as illustrated. Each Data Domain is meant to have its own data model, ranging from a straightforward one to, in the best case, a layered data model as shown in the image, with:

Data from various source systems is first loaded into the Landing/Staging Area.

The delivered data must be cleaned before it is loaded into the Data Processing (Core). Most of these cleaning steps are performed in this area.

The data from the different source systems is brought together in a central area, the Data Processing (Core), via the Landing and Data Storage areas, and is stored there for extended periods, often several years.

Subsets of the data from the Core are stored in a form suitable for user queries.

Between the layers, we have lots of metadata.

Different types of metadata are needed for the smooth operation of the Data Warehouse. Business metadata contains business descriptions of all attributes, drill paths, and aggregation rules for the front-end applications and code designations. Technical metadata describes, for example, data structures, mapping rules, and parameters for ETL control. Operational metadata contains all log tables, error messages, logging of ETL processes, and much more. The metadata forms the infrastructure of a DWH system and is described as "data about data".

Within a Data Domain, several users build different dashboards. Think of a dashboard as a specific use case (e.g., Covid, Sales) that serves a particular purpose. Each of these dashboards consists of individual charts and data sources in Superset. Ultimately, what you see in the HelloDATA portal are the dashboards that combine all of the sub-components that Superset provides.

As described in the intro, the portal is the heart of the HelloDATA application, with access to all critical applications.

Entry page of HelloDATA: When you enter the portal for the first time, you land on the dashboard where you have

More technical details are in the "Module deployment view" chapter below.

Going one level deeper, we see that we use different modules to make the portal and HelloDATA work.

We have the following modules:

At the center are two components, NATS and Keycloak. Keycloak, together with the HelloDATA portal, handles the authentication, authorization, and permission management of HelloDATA components. Keycloak is a powerful open-source identity and access management system. Its primary benefits include:

NATS, on the other hand, is central for handling communication between the different modules. Its strength lies in connecting modern distributed systems. It acts as the glue between microservices, whether they make and process statements or do stream processing.

NATS focuses on hyper-connected moving parts and the additional data each module generates. It supports location independence and mobility, whether the backend process is streaming or otherwise, and handles all of it securely.

NATS lets mobile frontends and microservices connect flexibly. There is no need for static 1:1 communication tied to a hostname, IP, or port. Instead, NATS offers m:n connectivity based on subjects. You can still use 1:1 communication, and on top of that you have things like load balancers, logs, system and network security models, proxies, and, most essential for us, sidecars. We use sidecars heavily in connection with NATS.

NATS can be deployed nearly anywhere: on bare metal, in a VM, as a container, inside K8s, on a device, or in whichever environment you choose, and all of it securely.
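To make the subject-based m:n idea more concrete, here is a minimal in-memory sketch in Python. It is not the NATS client API: the `SubjectBus` class, the subject name `hellodata.user.role`, and the worker names are assumptions made up purely for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str, str], None]


class SubjectBus:
    """Toy in-memory stand-in for a subject-based message bus (not the NATS API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, subject: str, handler: Handler) -> None:
        # Subscribers register interest in a subject, not in a host, IP, or port.
        self._subscribers[subject].append(handler)

    def publish(self, subject: str, payload: str) -> int:
        # Deliver to every subscriber of the subject and return the delivery count.
        handlers = self._subscribers.get(subject, [])
        for handler in handlers:
            handler(subject, payload)
        return len(handlers)


received: List[str] = []
bus = SubjectBus()

# Two hypothetical subsystem workers listen on the same subject (the "n" side).
bus.subscribe("hellodata.user.role", lambda s, p: received.append(f"superset-worker:{p}"))
bus.subscribe("hellodata.user.role", lambda s, p: received.append(f"airflow-worker:{p}"))

# Any number of publishers can send to that subject (the "m" side).
delivered = bus.publish("hellodata.user.role", "role-updated")
undelivered = bus.publish("unknown.subject", "ignored")
print(delivered, undelivered, received)
```

The publisher only knows the subject. Adding a third worker means adding one more subscription, without changing the publisher.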

Here is an example of subsystem communication. NATS, at the center, handles the communication between the HelloDATA platform and the subsystems with its workers, as seen in the image below.

The HelloDATA portal has workers. These workers are deployed as extra containers next to the main container, called "Sidecar Containers". Each module that needs to communicate requires a sidecar with these workers deployed to talk to NATS. The subsystem itself therefore also has its own workers that exchange messages with NATS.

Everything starts with a web browser session. The HelloDATA user accesses the HelloDATA Portal through HTTP. Before you see any of your modules or components, you must authenticate against Keycloak. Once logged in, you have a Single Sign-On token that gives access to different Business Domains or Data Domains, depending on your role.

The HelloDATA portal sends events to the EventWorkers and connects via JDBC to the portal database. The portal database persists the portal's settings and necessary configurations.

The EventWorkers, on the other side, communicate with the different HelloDATA modules discussed above (Keycloak, NATS, Data Stack with dbt, Airflow, and Superset) where needed. Each module is part of the domain view and persists its data within its own datastore.

In this flow chart, you see what we discussed above in a different way. Here, we assign a new user role. Again, everything starts with the HelloDATA Portal and an existing session from Keycloak. With that, the portal worker publishes a JSON message via UserRoleEvent to NATS. As the communication hub for HelloDATA, NATS knows what to do with each message and sends it to the respective subsystem worker.

The subsystem workers execute that instruction and create and populate roles on, e.g., Superset and Airflow, and once done, inform the spawned subsystem worker that the work is complete. The worker pushes the result back to NATS, which informs the portal worker, and at the end a message is displayed in the HelloDATA portal.
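The role assignment flow can be sketched in a few lines of Python. This is an illustration under stated assumptions: the real UserRoleEvent schema is not shown in this document, so the field names (`user`, `role`, `dataDomain`), the role name, and the acknowledgement format are all hypothetical, and the workers are called directly instead of going through NATS.

```python
import json
from typing import Dict, List


def build_user_role_event(user: str, role: str, data_domain: str) -> str:
    """Serialize a role assignment as a JSON message (hypothetical schema)."""
    return json.dumps(
        {"type": "UserRoleEvent", "user": user, "role": role, "dataDomain": data_domain}
    )


def subsystem_worker(name: str, message: str, roles: Dict[str, List[str]]) -> str:
    """Apply the role in the subsystem's own store and return an acknowledgement."""
    event = json.loads(message)
    roles.setdefault(event["user"], []).append(event["role"])
    return json.dumps(
        {"type": "Ack", "subsystem": name, "user": event["user"], "status": "done"}
    )


message = build_user_role_event("alice", "DATA_DOMAIN_EDITOR", "showcase")
superset_roles: Dict[str, List[str]] = {}
airflow_roles: Dict[str, List[str]] = {}

# The hub would route the message to each subsystem worker; here we call them directly.
acks = [
    json.loads(subsystem_worker("superset", message, superset_roles)),
    json.loads(subsystem_worker("airflow", message, airflow_roles)),
]

# The portal would only report success once every subsystem has acknowledged.
all_done = all(ack["status"] == "done" for ack in acks)
print(all_done, superset_roles, airflow_roles)
```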

This chapter explains which data stack is behind HelloDATA BE.

The differentiator of HelloDATA lies in the Portal. It combines all the loosely coupled open-source tools into a single control pane.

The portal lets you see:

You can find more about the navigation and the features in the User Manual.

dbt is a small database toolset that has gained immense popularity and is the de facto standard for working with SQL. Why, you might ask? SQL is the most used language besides Python for data engineers, as it is declarative and its basics are easy to learn, and many business analysts or people working with Excel or similar tools might know a little already.

The declarative approach is handy as you only define the what, meaning you determine which columns you want in the SELECT and which table to query in the FROM clause. You can do more advanced things with WHERE, GROUP BY, etc., but you do not need to care about the how. You do not need to know which database or partition the data is stored in, what segment, or what storage. You do not need to know whether an index makes sense to use. All of it is handled by the query optimizer of Postgres (or any database supporting SQL).

But let's face it: SQL also has its downsides. If you have worked extensively with SQL, you know the spaghetti code that usually results. The issue is repeatability: there is no variable we can set and reuse in SQL. If you are familiar with CTEs, you can achieve a better structure with them, as they allow you to define specific queries as a block to reuse later. But this works only within one single query and is mainly handy if the query is already long.
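As a small illustration of a CTE, the following Python sketch defines a named block once and reuses it twice in the same query. It runs against an in-memory SQLite database from the standard library rather than Postgres, and the `sales` table and its values are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE sales (region TEXT, amount INTEGER);
    INSERT INTO sales VALUES ('north', 10), ('north', 30), ('south', 5);
    """
)

query = """
WITH regional_totals AS (          -- named block, defined once
    SELECT region, SUM(amount) AS total
    FROM sales
    GROUP BY region
)
SELECT region, total
FROM regional_totals               -- reused like a table
WHERE total > (SELECT AVG(total) FROM regional_totals)
ORDER BY region
"""

# Regions whose total is above the average of all regional totals.
rows = conn.execute(query).fetchall()
print(rows)
conn.close()
```

The block `regional_totals` is only visible inside this one statement, which is exactly the limitation described above: a second query cannot reuse it.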

But what if you'd like to define your facts and dimensions as separate queries and reuse them in another query? You'd need to decouple the queries from each other, persist the result to disk, and use that table on disk in the FROM clause of the following query. But what if we change something in the query, or even change its name? We won't notice in the dependent queries. And we will need to find out which queries depend on each other. There is no lineage or dependency graph.

It takes a lot of work to stay organized with SQL. Databases offer little support here, because SQL statements are declarative: you have to work out yourself how to store them in git and how to run them.

That's where dbt comes into play. dbt lets you create these dependencies within SQL. You can declaratively build on each query, and you'll get errors if one changes but the dependent one does not. You get a lineage graph (see an example), unit tests, and more. It's like having an assistant that helps you do your job. It adds software engineering practices on top of SQL engineering.
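The idea of a dependency graph between queries can be sketched with Python's standard library. This is not how dbt is implemented; it only illustrates what declared dependencies make possible: a valid run order and early detection of a renamed or missing model. The model names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical models: each key depends on the models listed in its set.
models: Dict[str, Set[str]] = {
    "stg_orders": set(),
    "stg_customers": set(),
    "dim_customer": {"stg_customers"},
    "fact_orders": {"stg_orders", "dim_customer"},
}


def missing_references(graph: Dict[str, Set[str]]) -> List[str]:
    """Return dependencies that are referenced but never defined."""
    defined = set(graph)
    return sorted({dep for deps in graph.values() for dep in deps if dep not in defined})


def run_order(graph: Dict[str, Set[str]]) -> List[str]:
    """Order models so that every model comes after everything it depends on."""
    return list(TopologicalSorter(graph).static_order())


order = run_order(models)
print(order)

# Simulate a rename that was not applied in the dependent model.
broken = {**models, "fact_orders": {"stg_orders", "dim_client"}}
missing = missing_references(broken)
print(missing)
```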

The danger we need to be aware of, as it becomes so easy to build your models, is ending up with thousands of tables. Although dbt catches many errors at compile time, good data modeling techniques remain essential to succeed.

Below, you see dbt docs, lineage, and templates:

1. Project Navigation
2. Detail Navigation
3. SQL Template
4. SQL Compiled (the actual SQL that gets executed)
5. Full data lineage with the sources and transformations for the current object

Or the zoomed-in dbt lineage (when clicked):

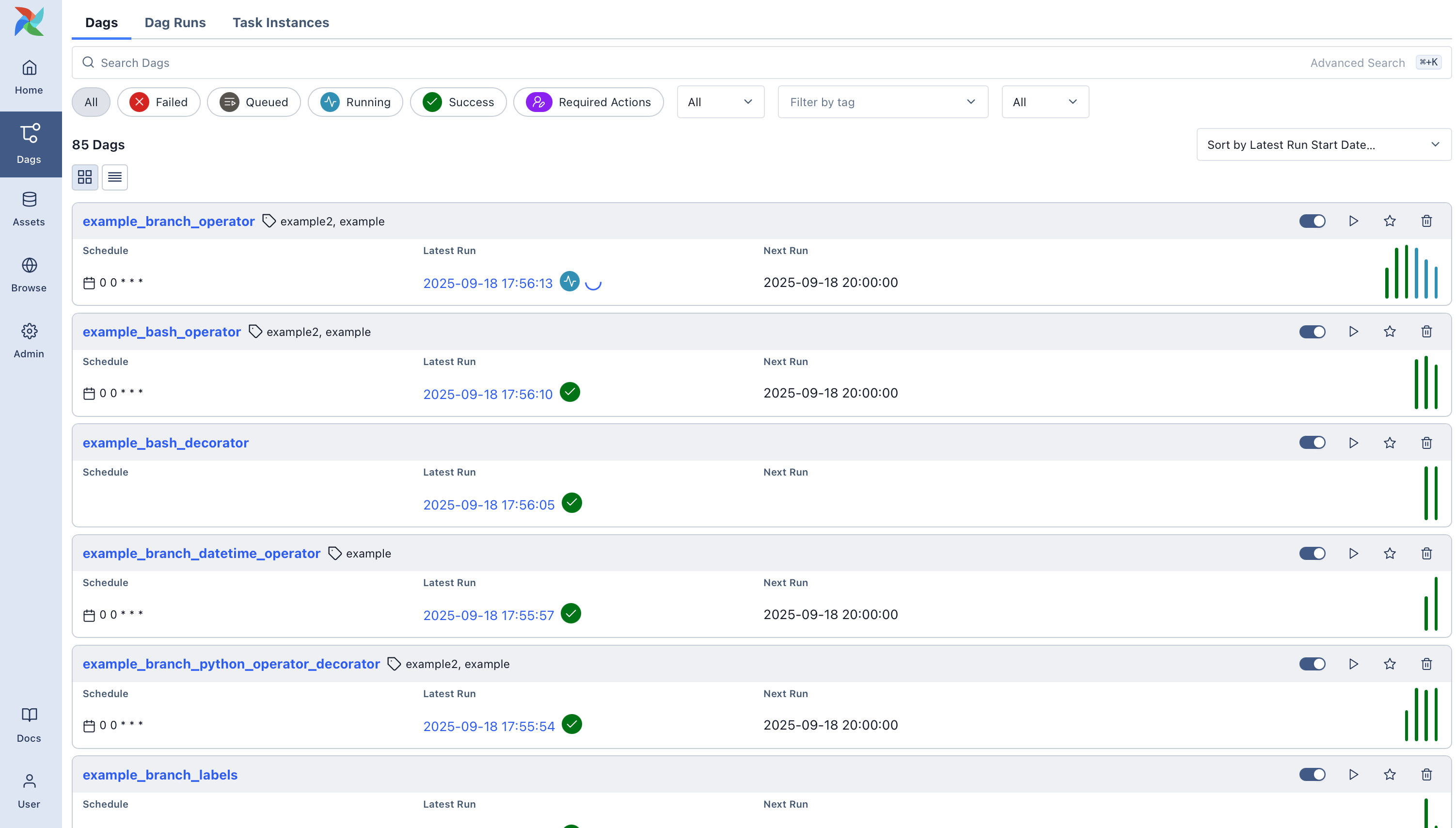

Airflow is the natural next step. If you have many SQL queries representing your business metrics, you want them to run on a daily or hourly schedule, or be triggered by events. That's where Airflow comes into play. Airflow is, in its simplest terms, a task or workflow scheduler whose workflows, called DAGs, can be written programmatically in Python. If you know cron jobs, these are the most basic task scheduler in Linux (think * * * * *), but they offer little to no customization beyond simple time scheduling.
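For readers who have not used cron, the five stars stand for minute, hour, day of month, month, and day of week. The following Python sketch only splits an expression into those named fields; the helper name `parse_cron` is made up for this example, and it does not evaluate ranges or steps.

```python
from typing import Dict

CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def parse_cron(expression: str) -> Dict[str, str]:
    """Split a five-field cron expression into named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    return dict(zip(CRON_FIELDS, parts))


every_minute = parse_cron("* * * * *")   # run every minute
daily_at_six = parse_cron("0 6 * * *")   # run at 06:00 every day
print(daily_at_six)
```

Everything beyond "when to run" (dependencies, retries, custom logic before or after a task) is outside what such an expression can describe.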

Airflow is different. Writing the DAGs in Python allows you to do whatever your business logic requires before or after a particular task is started. In the past, ETL tools like Microsoft SQL Server Integration Services (SSIS) and others were widely used. They were where your data transformation, cleaning, and normalisation took place. In more modern architectures, these tools aren't enough anymore. Moreover, code and data transformation logic are much more valuable to other data-savvy people (data analysts, data scientists, business analysts) in the company when they are not locked away in a proprietary format.

Airflow, or an orchestrator in general, ensures the correct execution of dependent tasks. It is very flexible and extensible with operators from the community or the built-in capabilities of the framework itself.

Airflow DAGs: the entry page shows you the status of all your DAGs

- what the schedule of each job is
- whether they are active, how often they have failed, etc.

Next, you can click on each of the DAGs and get into a detailed view:

It shows you the dependencies of your business's various tasks, ensuring that the order is handled correctly.

Superset is the entry point to your data. It's a popular open-source business intelligence dashboard tool that visualizes your data according to your needs. It supports a wide range of current chart types. You can combine them into dashboards that can be filtered and drilled down, as expected from a BI tool. Access to dashboards is restricted to authenticated users only. A user can be given view or edit rights to individual dashboards using roles and permissions. Public access to dashboards is not supported.

Let's start with the storage layer. We use Postgres, one of the most used and loved databases. Postgres is versatile and simple to use. It's a relational database that can be customized and scaled extensively.

The Infrastructure chapter goes into depth about how to run HelloDATA and its components on Kubernetes.

Kubernetes and its platform allow you to run and orchestrate container workloads. Kubernetes has become popular and is the de facto standard for cloud-native apps to (auto-)scale out and to deploy the various open-source tools fast, on any cloud, and locally. This is called cloud-agnostic, as you are not locked into any cloud vendor (Amazon, Microsoft, Google, etc.).

Kubernetes is infrastructure as code, specifically as YAML, allowing you to version and test your deployment quickly. All the resources in Kubernetes, including Pods, Configurations, Deployments, Volumes, etc., can be expressed in a YAML file using Kubernetes tools like Helm. Developers can quickly write applications that run across multiple operating environments. Costs can be reduced by scaling down, and any programming language can be used as long as it runs from a simple Dockerfile. Its modularity and abstraction make it manageable, and with the use of containers you can monitor all your applications in one place.

Kubernetes Namespaces provide a mechanism for isolating groups of resources within a single cluster. Names of resources need to be unique within a namespace but not across namespaces. Namespace-based scoping is applicable only for namespaced objects (e.g., Deployments, Services, etc.) and not for cluster-wide objects (e.g., StorageClass, Nodes, PersistentVolumes, etc.).
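The naming rule can be illustrated with a toy model in Python. This is not the Kubernetes API: the `Cluster` class, its `create` method, and the namespace names are assumptions for illustration, and only the three cluster-wide kinds mentioned above are treated as cluster-scoped.

```python
from typing import Dict, Optional, Tuple


class Cluster:
    """Toy model of namespaced vs. cluster-wide object names (not the Kubernetes API)."""

    CLUSTER_SCOPED = {"StorageClass", "Node", "PersistentVolume"}

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, str, str], dict] = {}

    def create(self, kind: str, name: str, namespace: str = "default",
               spec: Optional[dict] = None) -> Tuple[str, str, str]:
        # Cluster-wide kinds ignore the namespace, so their names are global.
        scope = "" if kind in self.CLUSTER_SCOPED else namespace
        key = (scope, kind, name)
        if key in self._objects:
            raise ValueError(f"{kind} '{name}' already exists in scope '{scope or 'cluster'}'")
        self._objects[key] = spec or {}
        return key


cluster = Cluster()

# The same Deployment name is fine in two different namespaces.
cluster.create("Deployment", "superset", namespace="data-domain-a")
cluster.create("Deployment", "superset", namespace="data-domain-b")

# A second PersistentVolume with the same name is rejected, whatever the namespace.
cluster.create("PersistentVolume", "pv-data", namespace="data-domain-a")
try:
    cluster.create("PersistentVolume", "pv-data", namespace="data-domain-b")
    duplicate_rejected = False
except ValueError:
    duplicate_rejected = True

print(duplicate_rejected)
```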

Here, we have a look at the module view, with an inside view of how the HelloDATA Portal is accessed.

The Portal API is served with Spring Boot, WildFly, and Angular.

This section follows up on how storage is persisted for the Domain View introduced in the chapters above.

Storage is an important topic, as this is where the business value and the data itself are stored.

From a Kubernetes and deployment view, everything is encapsulated inside a Namespace. As explained in the "Domain View" above, we have different layers, from one Business Domain to n (multiple) Data Domains.

Each domain holds its data on persistent storage, whether Postgres for relational databases, blob storage for files, or file storage on persistent volumes within Kubernetes.

GitSync is a tool we added to allow GitOps-type deployment. As a user, you can push changes to your git repo, and GitSync will automatically deploy them into your cluster on Kubernetes.

Here is another view showing that persistent storage within Kubernetes (K8s) can hold data across the Data Domain. If these persistent volumes are used to store Data Domain information, a backup and restore plan for this data must also be implemented.

Alternatively, blob storage on any cloud vendor or services such as a managed Postgres service can be used, as these are typically managed and come with features such as backup and restore.

HelloDATA uses Kubernetes jobs to perform certain activities.

Contents:

HelloDATA can be operated as different platforms, e.g., development, test, and/or production platforms. The deployment is based on common CI/CD principles. It uses Git and Flux internally to deploy its resources onto the specific Kubernetes clusters.

In case of resource shortages, the underlying platform can be extended with additional resources upon request.

Horizontal scaling of the infrastructure can be done within the given resource boundaries (e.g., multiple pods for Superset).

See the Roles and authorization concept.

This is the demo case of HD-BE. It imports animal data from an external source, loads it with Airflow, models it with dbt, and visualizes it in Superset.

It will hopefully show you how the platform works, and it comes pre-installed with the docker-compose installation.

Click on the data domain showcase and you can explore pre-defined dashboards built with the Airflow job and dbt models described below.

The technical details of the showcase are described below: how the Airflow pipeline collects the data from an open API and models it with dbt.

The fact table fact_breeds_long describes key figures that are used to derive the stock of registered, living animals, divided by breed over time.

The following tables from the [lzn] database schema are selected for the calculation of the key figure:

The fact table fact_catle_beefiness_fattissue describes key figures that are used to derive the number of slaughtered cows by year and month.

Classification is done according to CH-TAX (Trading Class Classification CHTAX System | VIEGUT AG).

The following tables from the [lzn] database schema are selected for the calculation of the key figure:

The fact table fact_cattle_popvariations describes key figures that are used to derive the increase and decrease of the cattle population in the Animal Traffic Database (https://www.agate.ch/) over time (including reports from Liechtenstein).

The key figures are grouped according to the following types of reports:

The following table from the [lzn] database schema is selected for the calculation of the key figure:

The fact table fact_cattle_pyr_wide describes key figures that are used to derive the distribution of registered living cattle by age class and gender.

The following table from the [lzn] database schema is selected for the calculation of the key figure:

The fact table fact_cattle_pyr_long pivots all key figures from fact_cattle_pyr_wide.
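Pivoting from a wide to a long layout can be sketched in plain Python. In the showcase this is done in dbt/SQL; the column names and values below are invented for illustration and are not the actual columns of fact_cattle_pyr_wide.

```python
from typing import Dict, List, Sequence

# Hypothetical wide rows: one column per key figure.
wide_rows: List[Dict[str, object]] = [
    {"year": 2023, "age_class": "0-1", "male": 120, "female": 150},
    {"year": 2023, "age_class": "1-2", "male": 90, "female": 110},
]


def wide_to_long(rows: Sequence[Dict[str, object]],
                 id_columns: Sequence[str],
                 value_columns: Sequence[str]) -> List[Dict[str, object]]:
    """Turn each value column into its own row with a key_figure/value pair."""
    long_rows: List[Dict[str, object]] = []
    for row in rows:
        for column in value_columns:
            record = {key: row[key] for key in id_columns}
            record["key_figure"] = column
            record["value"] = row[column]
            long_rows.append(record)
    return long_rows


long_rows = wide_to_long(wide_rows, ["year", "age_class"], ["male", "female"])
for record in long_rows:
    print(record)
```

The long layout has one row per key figure, which tends to be easier to filter and group in a BI tool than one column per key figure.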

The data foundation of the Superset visualizations, in the form of Datasets, Dashboards, and Charts, is realized through a Database Connection.

In this case, a connection is established to the PostgreSQL database in which the dbt scripts described above were executed.

Datasets are used to prepare the data foundation in a suitable form, which can then be visualized in charts in an appropriate way.

Essentially, modeled fact tables from the UDM database schema are selected and linked with dimension tables.

This allows facts to be calculated or evaluated at different levels of business granularity.

| Source | Description |
|---|---|
| https://tierstatistik.identitas.ch/de/ | Website of the API provider |
| https://tierstatistik.identitas.ch/de/docs.html | Documentation of the platform and description of the data basis and API |
| tierstatistik.identitas.ch/tierstatistik.rdf | API and data provided by the website |

If you haven't turned on Kubernetes, you'll get an error similar to this:

```
urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='kubernetes.docker.internal', port=6443): Max retries exceeded with url: /api/v1/namespaces/default/pods?labelSelector=dag_id%3Drun_boiler_example%2Ckubernetes_pod_operator%3DTrue%2Cpod-label-test%3Dlabel-name-test%2Crun_id%3Dmanual__2024-01-29T095915.2491840000-f3be8d87f%2Ctask_id%3Drun_duckdb_query%2Calready_checked%21%3DTrue%2C%21airflow-worker (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0xffff82c2ab10>: Failed to establish a new connection: [Errno 111] Connection refused'))
```

Full log:

```
[2024-01-29, 09:48:49 UTC] {pod.py:1017} ERROR - 'NoneType' object has no attribute 'metadata'
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/urllib3/connection.py", line 174, in _new_conn
    conn = connection.create_connection(
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/urllib3/util/connection.py", line 95, in create_connection
    raise err
  File "/usr/local/lib/python3.11/site-packages/urllib3/util/connection.py", line 85, in create_connection
    sock.connect(sa)
ConnectionRefusedError: [Errno 111] Connection refused
During handling of the above exception, another exception occurred:
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/urllib3/connectionpool.py", line 714, in urlopen
    httplib_response = self._make_request(
                       ^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/urllib3/connectionpool.py", line 403, in _make_request
    self._validate_conn(conn)
  File "/usr/local/lib/python3.11/site-packages/urllib3/connectionpool.py", line 1053, in _validate_conn
    conn.connect()
  File "/usr/local/lib/python3.11/site-packages/urllib3/connection.py", line 363, in connect
    self.sock = conn = self._new_conn()
                       ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/urllib3/connection.py", line 186, in _new_conn
    raise NewConnectionError(
urllib3.exceptions.NewConnectionError: <urllib3.connection.HTTPSConnection object at 0xffff82db3650>: Failed to establish a new connection: [Errno 111] Connection refused
During handling of the above exception, another exception occurred:
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 583, in execute_sync
    self.pod = self.get_or_create_pod( # must set `self.pod` for `on_kill`
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 545, in get_or_create_pod
    pod = self.find_pod(self.namespace or pod_request_obj.metadata.namespace, context=context)

....

airflow.exceptions.AirflowException: Pod airflow-running-dagster-workspace-jdkqug7h returned a failure.
remote_pod: None
[2024-01-29, 09:48:49 UTC] {taskinstance.py:1398} INFO - Marking task as UP_FOR_RETRY. dag_id=run_boiler_example, task_id=run_duckdb_query, execution_date=20210501T000000, start_date=20240129T094849, end_date=20240129T094849
[2024-01-29, 09:48:49 UTC] {standard_task_runner.py:104} ERROR - Failed to execute job 3 for task run_duckdb_query (Pod airflow-running-dagster-workspace-jdkqug7h returned a failure.
remote_pod: None; 225)
[2024-01-29, 09:48:49 UTC] {local_task_job_runner.py:228} INFO - Task exited with return code 1
[2024-01-29, 09:48:49 UTC] {taskinstance.py:2776} INFO - 0 downstream tasks scheduled from follow-on schedule check
```

If your image name or image is not available locally (check with `docker image ls`), you'll get an error in Airflow like this:

```
[2024-01-29, 10:10:14 UTC] {pod.py:961} INFO - Building pod airflow-running-dagster-workspace-64ngbudj with labels: {'dag_id': 'run_boiler_example', 'task_id': 'run_duckdb_query', 'run_id': 'manual__2024-01-29T101013.7029880000-328a76b5e', 'kubernetes_pod_operator': 'True', 'try_number': '1'}
[2024-01-29, 10:10:14 UTC] {pod.py:538} INFO - Found matching pod airflow-running-dagster-workspace-64ngbudj with labels {'airflow_kpo_in_cluster': 'False', 'airflow_version': '2.7.1-astro.1', 'dag_id': 'run_boiler_example', 'kubernetes_pod_operator': 'True', 'pod-label-test': 'label-name-test', 'run_id': 'manual__2024-01-29T101013.7029880000-328a76b5e', 'task_id': 'run_duckdb_query', 'try_number': '1'}
[2024-01-29, 10:10:14 UTC] {pod.py:539} INFO - `try_number` of task_instance: 1
[2024-01-29, 10:10:14 UTC] {pod.py:540} INFO - `try_number` of pod: 1
[2024-01-29, 10:10:14 UTC] {pod_manager.py:348} WARNING - Pod not yet started: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:10:15 UTC] {pod_manager.py:348} WARNING - Pod not yet started: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:10:16 UTC] {pod_manager.py:348} WARNING - Pod not yet started: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:10:17 UTC] {pod_manager.py:348} WARNING - Pod not yet started: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:10:18 UTC] {pod_manager.py:348} WARNING - Pod not yet started: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:12:15 UTC] {pod.py:823} INFO - Deleting pod: airflow-running-dagster-workspace-64ngbudj
[2024-01-29, 10:12:15 UTC] {taskinstance.py:1935} ERROR - Task failed with exception
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 594, in execute_sync
    self.await_pod_start(pod=self.pod)
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 556, in await_pod_start
    self.pod_manager.await_pod_start(pod=pod, startup_timeout=self.startup_timeout_seconds)
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/utils/pod_manager.py", line 354, in await_pod_start
    raise PodLaunchFailedException(msg)
airflow.providers.cncf.kubernetes.utils.pod_manager.PodLaunchFailedException: Pod took longer than 120 seconds to start. Check the pod events in kubernetes to determine why.
During handling of the above exception, another exception occurred:
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 578, in execute
    return self.execute_sync(context)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 617, in execute_sync
    self.cleanup(
  File "/usr/local/lib/python3.11/site-packages/airflow/providers/cncf/kubernetes/operators/pod.py", line 746, in cleanup
    raise AirflowException(
airflow.exceptions.AirflowException: Pod airflow-running-dagster-workspace-64ngbudj returned a failure.

...

[2024-01-29, 10:12:15 UTC] {local_task_job_runner.py:228} INFO - Task exited with return code 1
[2024-01-29, 10:12:15 UTC] {taskinstance.py:2776} INFO - 0 downstream tasks scheduled from follow-on schedule check
```

If you open a Kubernetes monitoring tool such as Lens or k9s, you'll also see the pod struggling to pull the image:

Another possible cause: if you haven't created the local PersistentVolume, you'll see a message such as "my-pvc" does not exist. In that case, you need to create the PVC first.

On this page, we'll explain what workspaces are in the context of HelloDATA-BE and how to use them, and you'll create your own based on a prepared starter repo.

Info

Also see the step-by-step video we created that might help you further.

Within the context of HelloDATA-BE, data engineers or other technical people can develop their dbt and Airflow code, or even bring their own tool, all packed into a separate git repo and run as part of HelloDATA-BE, where they enjoy the benefits of persistent storage, visualization tools, user management, monitoring, etc.

```mermaid
graph TD
    subgraph "Business Domain (Tenant)"
        BD[Business Domain]
        BD -->|Services| SR1[Portal]
        BD -->|Services| SR2[Orchestration]
        BD -->|Services| SR3[Lineage]
        BD -->|Services| SR5[Database Manager]
        BD -->|Services| SR4[Monitoring & Logging]
    end
    subgraph "Workspaces"
        WS[Workspaces] -->|git-repo| DE[Data Engineering]
        WS[Workspaces] -->|git-repo| ML[ML Team]
        WS[Workspaces] -->|git-repo| DA[Product Analysts]
        WS[Workspaces] -->|git-repo| NN[...]
    end
    subgraph "Data Domain (1-n)"
        DD[Data Domain] -->|Persistent Storage| PG[Postgres]
        DD[Data Domain] -->|Data Modeling| DBT[dbt]
        DD[Data Domain] -->|Visualization| SU[Superset]
    end

    BD -->|Contains 1-n| DD
    DD -->|n-instances| WS

    %% Colors
    class BD business
    class DD data
    class WS workspace
    class SS,PGA subsystem
    class SR1,SR2,SR3,SR4 services

    classDef business fill:#96CD70,stroke:#333,stroke-width:2px;
    classDef data fill:#A898D8,stroke:#333,stroke-width:2px;
    classDef workspace fill:#70AFFD,stroke:#333,stroke-width:2px;
    %% classDef subsystem fill:#F1C40F,stroke:#333,stroke-width:2px;
    %% classDef services fill:#E74C3C,stroke:#333,stroke-width:1px;
```

A workspace can have n instances within a data domain. What does that mean? Each team can handle its own requirements and develop and build its project independently.

Think of an ML engineer who needs heavy tools such as TensorFlow, while an analyst might build simple dbt models and another data engineer uses a specific tool from the Modern Data Stack.

Workspaces are best used for development, implementing custom business logic, and modeling your data. But there is no limit to what you build as long as it can run as a DAG in an Airflow data pipeline.

Generally speaking, a workspace is used whenever someone needs to create custom logic that is not yet integrated within the HelloDATA BE Platform.

As a second step, imagine you implemented a critical business transformation everyone needs: that code and DAG could be moved and become a default DAG within a data domain. But the development always happens within the workspace, enabling self-service.

Without workspaces, every request would need to go through the HelloDATA BE project team. Data engineers need a straightforward way, isolated from deployment, to add custom code for their specific data domain pipelines.

When you create your workspace, it will be deployed within HelloDATA-BE and run by an Airflow DAG. The Airflow DAG is the integration into HD. You'll define things like how often it runs, what it should run, in which order, etc.

Below, you see an example of two different Airflow DAGs deployed from two different Workspaces (marked with red arrows):

To implement your own Workspace, we created a hellodata-be-workspace-starter. This repo contains a minimal set of artefacts needed to be deployed on HD.

- `Settings -> Kubernetes -> Enable Kubernetes`
- `docker build -t hellodata-ws-boilerplate:0.1.0-a.1 .` (or the name of your choice)

From now on, whenever you have a change, you just build a new image, and it will be deployed on HelloDATA-BE automatically, making you and your team independent.

Below you find an example structure that helps you understand how to configure workspaces for your needs.

The repo helps you build your workspace: simply clone the whole repo and add your changes.

We generally have these boilerplate files:

```
├── Dockerfile
├── Makefile
├── README.md
├── build-and-push.sh
├── deployment
│   └── deployment-needs.yaml
└── src
    ├── dags
    │   └── airflow
    │       ├── .astro
    │       │   ├── config.yaml
    │       ├── Dockerfile
    │       ├── Makefile
    │       ├── README.md
    │       ├── airflow_settings.yaml
    │       ├── dags
    │       │   ├── .airflowignore
    │       │   └── boiler-example.py
    │       ├── include
    │       │   └── .kube
    │       │       └── config
    │       ├── packages.txt
    │       ├── plugins
    │       ├── requirements.txt
    └── duckdb
        └── query_duckdb.py
```

Here, query_duckdb.py and the boiler-example.py DAG are my custom code, which you'd replace with your own.

The Airflow DAG itself can be reused, as it uses the KubernetesPodOperator, which works both within HD and locally (see more below). Essentially, you change the name, the schedule, and the image name to your needs, and you're good to go.

Example of an Airflow DAG:

from pendulum import datetime
from airflow import DAG
from airflow.configuration import conf
from airflow.providers.cncf.kubernetes.operators.kubernetes_pod import (
    KubernetesPodOperator,
)
from kubernetes.client import models as k8s
import os

default_args = {
    "owner": "airflow",
    "depends_on_past": False,
    "start_date": datetime(2021, 5, 1),
    "email_on_failure": False,
    "email_on_retry": False,
    "retries": 1,
}

workspace_name = os.getenv("HD_WS_BOILERPLATE_NAME", "ws-boilerplate")
namespace = os.getenv("HD_NAMESPACE", "default")

# This will use .kube/config for local Astro CLI Airflow and ENV variable for k8s deployment
if namespace == "default":
    # copy your local kube file to the include folder: `cp ~/.kube/config include/.kube/config`
    config_file = "include/.kube/config"
    in_cluster = False
else:
    in_cluster = True
    config_file = None

with DAG(
    dag_id="run_boiler_example",
    schedule="@once",
    default_args=default_args,
    description="Boiler Plate for running a hello data workspace in airflow",
    tags=[workspace_name],
) as dag:
    KubernetesPodOperator(
        namespace=namespace,
        image="my-docker-registry.com/hellodata-ws-boilerplate:0.1.0",
        image_pull_secrets=[k8s.V1LocalObjectReference("regcred")],
        labels={"pod-label-test": "label-name-test"},
        name="airflow-running-dagster-workspace",
        task_id="run_duckdb_query",
        in_cluster=in_cluster,  # if set to True, will look in the cluster; if False, looks for the config file
        cluster_context="docker-desktop",  # is ignored when in_cluster is set to True
        config_file=config_file,
        is_delete_operator_pod=True,
        get_logs=True,
        # please add/overwrite your command here
        cmds=["/bin/bash", "-cx"],
        arguments=[
            "python query_duckdb.py && echo 'Query executed successfully'",  # add your command here
        ],
    )
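
The local-versus-cluster switch in the DAG above can also be isolated in a small helper, which makes it easier to reason about (and test) outside Airflow. This is only a sketch: the function name `resolve_kube_settings` is hypothetical and not part of the boilerplate repo. It relies on the same convention as the DAG, namely that the namespace `default` means a local Astro CLI run.

```python
import os
from typing import Optional, Tuple

# Path used by the boilerplate DAG for local runs.
LOCAL_KUBE_CONFIG = "include/.kube/config"


def resolve_kube_settings(namespace: str) -> Tuple[bool, Optional[str]]:
    """Return (in_cluster, config_file) for the KubernetesPodOperator.

    Hypothetical helper: "default" is treated as a local run that needs a
    kube config file; any other namespace is treated as an in-cluster run.
    """
    if namespace == "default":
        return False, LOCAL_KUBE_CONFIG
    return True, None


namespace = os.getenv("HD_NAMESPACE", "default")
in_cluster, config_file = resolve_kube_settings(namespace)
print(f"namespace={namespace} in_cluster={in_cluster} config_file={config_file}")
```

The two returned values map directly onto the `in_cluster` and `config_file` arguments of the operator.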

To run locally, the easiest way is to use the Astro CLI (see link for installation). With it, we can simply run astro dev start or astro dev stop to bring the local environment up or down.

For local deployment we have these requirements:

Your local kube config copied into the include folder: cp ~/.kube/config src/dags/airflow/include/.kube/ (on Windows, the source file is at C:\Users\[YourIdHere]\.kube\config)

docker build must have run (check with docker image ls)

The config file is used by Astro to run on local Kubernetes. See more info in Run your Astro project in a local Airflow environment.

Dockerfile

Below is an example of how to install requirements (here, duckdb) and copy my custom code src/duckdb/query_duckdb.py into the image.

Boilerplate example:

FROM python:3.10-slim

RUN mkdir -p /opt/airflow/airflow_home/dags/

# Copy your Airflow DAGs, which will be copied into the Business Domain Airflow (these DAGs will be executed by Airflow)
COPY src/dags/airflow/dags/* /opt/airflow/airflow_home/dags/

WORKDIR /usr/src/app

RUN pip install --upgrade pip

# Install DuckDB (example - please add your own dependencies here)
RUN pip install duckdb

# Copy the script into the container
COPY src/duckdb/query_duckdb.py ./

# long-running process to keep the container running
CMD tail -f /dev/null

deployment-needs.yaml

Below you see an example of the deployment needs in deployment-needs.yaml, which defines:

This is the part that will most likely change.

All of this will eventually be more automated. Also, let us know about missing specs, or just add them to the file, and we'll add the functionality on the deployment side.

spec:
  initContainers:
    copy-dags-to-bd:
      image:
        repository: my-docker-registry.com/hellodata-ws-boilerplate
        pullPolicy: IfNotPresent
        tag: "0.1.0"
      resources: {}

      volumeMounts:
        - name: storage-hellodata
          type: external
          path: /storage
      command: [ "/bin/sh","-c" ]
      args: [ "mkdir -p /storage/${datadomain}/dags/${workspace}/ && rm -rf /storage/${datadomain}/dags/${workspace}/* && cp -a /opt/airflow/airflow_home/dags/*.py /storage/${datadomain}/dags/${workspace}/" ]

  containers:
    - name: ws-boilerplate
      image: my-docker-registry.com/hellodata-ws-boilerplate:0.1.0
      imagePullPolicy: Always


#needed envs for Airflow
airflow:

  extraEnv: |
    - name: "HD_NAMESPACE"
      value: "${namespace}"
    - name: "HD_WS_BOILERPLATE_NAME"
      value: "dd01-ws-boilerplate"
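
To see what the init container command looks like once the `${datadomain}` and `${workspace}` placeholders are filled in, the substitution can be reproduced with Python's `string.Template`. This is only an illustration: how HelloDATA resolves these placeholders at deployment time is not described here, and the values `dd01` and `ws-boilerplate` are example inputs.

```python
from string import Template

# Same command string as in the args entry of deployment-needs.yaml.
ARGS_TEMPLATE = Template(
    "mkdir -p /storage/${datadomain}/dags/${workspace}/ "
    "&& rm -rf /storage/${datadomain}/dags/${workspace}/* "
    "&& cp -a /opt/airflow/airflow_home/dags/*.py /storage/${datadomain}/dags/${workspace}/"
)


def render_init_command(datadomain: str, workspace: str) -> str:
    """Fill in the placeholders; raises KeyError if one is left unset."""
    return ARGS_TEMPLATE.substitute(datadomain=datadomain, workspace=workspace)


rendered = render_init_command("dd01", "ws-boilerplate")
print(rendered)
```

Reading the rendered command makes the three steps explicit: create the workspace DAG folder on the shared storage, empty it, and copy the DAG files from the image into it.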

We've added another demo DAG called showcase-boiler.py, which downloads data from the web (animal statistics, ~150 CSVs), creates Postgres tables, inserts the data, and runs dbt run and dbt docs at the end.

In this case, we use multiple tasks in one DAG. They all use the same image, but you could use a different one for each step, meaning you could use Python for the download, R for the transformation, and Java for machine learning. As long as the images are similar, I'd suggest using the same image.

Another addition is the use of volumes. These are persistent storage, also called PVs in Kubernetes, which let you keep intermediate data outside of the container. Downloaded CSVs are stored there for the next task to pick up.

Locally, you need to create such storage once; there is a script in case you want to apply it to your local Docker Desktop setup. Run this command:

Be sure to use the same name in your DAGs as well; in this example, we use my-pvc. See in showcase-boiler.py how the volumes are mounted:

volume_claim = k8s.V1PersistentVolumeClaimVolumeSource(claim_name="my-pvc")
volume = k8s.V1Volume(name="my-volume", persistent_volume_claim=volume_claim)
volume_mount = k8s.V1VolumeMount(name="my-volume", mount_path="/mnt/pvc")
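
The hand-off between tasks through the mounted volume can be sketched without Kubernetes: one step writes files into a shared directory, the next step reads whatever it finds there. In the snippet below, a temporary directory stands in for the mount path `/mnt/pvc`, and the function names, file name, and CSV content are made up for illustration.

```python
import csv
import tempfile
from pathlib import Path
from typing import Dict, List


def download_step(shared_dir: Path) -> Path:
    """Stand-in for the download task: writes a CSV to the shared storage."""
    target = shared_dir / "animals.csv"
    with target.open("w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["animal", "count"])
        writer.writerow(["cow", 3])
    return target


def load_step(shared_dir: Path) -> List[Dict[str, str]]:
    """Stand-in for the next task: reads every CSV the first task left behind."""
    rows: List[Dict[str, str]] = []
    for csv_file in sorted(shared_dir.glob("*.csv")):
        with csv_file.open(newline="") as fh:
            rows.extend(csv.DictReader(fh))
    return rows


with tempfile.TemporaryDirectory() as tmp:
    shared = Path(tmp)  # in the cluster, this would be the mount path /mnt/pvc
    download_step(shared)
    rows = load_step(shared)

print(rows)
```

Because the second step only depends on the directory, each task can run in its own pod (and even its own image) as long as both mount the same claim.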

I hope this has illustrated how to create your own workspace. Otherwise, let us know in the discussions or create an issue/PR.

If you encounter errors, we collect them in Troubleshooting.


This is the open documentation about HelloDATA BE. We hope you enjoy it.

Contribute

In case something is missing or you'd like to add something, below is how you can contribute:

HelloDATA BE is an enterprise data platform built on top of open-source tools based on the modern data stack. We use state-of-the-art tools such as dbt for data modeling with SQL, Airflow to run and orchestrate tasks, and Superset to visualize the BI dashboards. The underlying database is Postgres.

Each of these components is carefully chosen, and additional tools can be added at a later stage.

These days, more data is created each year than in all the years before. Each fridge, light bulb, or really anything starts to produce data, meaning there is a growing need to make sense of more data. Usually, not all data is necessary and valid, but because data keeps growing, we must be able to collect and store it easily. There is a great need to be able to analyze this data. The result can be put to secondary use and thus create added value.

That is what this open enterprise data platform is all about. In the old days, you used to have one single solution provided; think of SAP or Oracle. These days, that has completely changed. New SaaS products are created daily, each specializing in a tiny niche. There are also many open-source tools you can use for free and get going with in minutes.

So why would you need HelloDATA BE? It's simple. You want the best of both worlds. You want open source to avoid lock-in and to use the strongest, collaboratively created product in the open. People worldwide can fix a security bug in minutes, or you can even go into the source code (as it's available to everyone) and fix it yourself, compared to a large vendor where you rely solely on their update cycle.

But let's be honest for a second: if we use the latest shiny thing from open source, there are a lot of bugs, missing features, and independent tools. That's precisely where HelloDATA BE comes into play. We are building the missing platform that combines the best-of-breed open-source technologies into a single portal, making it enterprise-ready by adding features you typically won't get in an open-source product. We also fix bugs that we encounter during our extensive tests.

Sounds too good to be true? Give it a try. Do you want to know the best thing? It's open-source as well. Check out our GitHub HelloDATA BE.

Want to run HelloDATA BE and test it locally? Run the following commands to deploy all components:

cd hello-data-deployment/docker-compose
docker-compose up -d

Note: Please refer to our docker-compose README for more information; there are some mandatory presets you need to configure.


Authentication and authorization within the various logical contexts or domains of the HelloDATA system are handled as follows.

Authentication is handled via the OAuth 2 standard. In the case of the Canton of Bern, this is done via the central Keycloak server. Authorizations to the various elements within a subject or Data Domain are handled via authorization within the HelloDATA portal.

To keep administration simple, a role concept is applied. Instead of defining the authorizations for each user, roles receive the authorizations, and the users are then assigned to the roles. The roles available in the portal have fixed, predefined permissions.

For a user to gain access to a Business Domain, the user must be authenticated for that Business Domain.

Users without authentication who try to access a Business Domain will receive an error message.

The following two logical roles are available within a Business Domain:

BUSINESS_DOMAIN_ADMIN is automatically DATA_DOMAIN_ADMIN in all Data Domains within the Business Domain (see Data Domain Context).

A Data Domain encapsulates all data elements and tools that are of interest for a specific issue.

HelloDATA supports 1 to n Data Domains within a Business Domain.

The resources to be protected within a Data Domain are:

The following three logical roles are available within a Data Domain:

Depending on the role assigned, users are given different permissions to act in the Data Domain.

A user who has not been assigned a role in a Data Domain will generally not be granted access to any resources of that Data Domain.
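
The two rules stated so far (a BUSINESS_DOMAIN_ADMIN is DATA_DOMAIN_ADMIN everywhere, and a user without a role in a Data Domain gets no access) can be sketched as a small lookup. This is an illustrative model only: the function name and the data structures are assumptions, not the portal's actual implementation.

```python
from typing import Dict, Optional


def effective_data_domain_role(
    business_role: Optional[str],
    data_domain_roles: Dict[str, str],
    data_domain: str,
) -> Optional[str]:
    """Return the role a user effectively has in one Data Domain.

    business_role: the user's Business Domain role, if any.
    data_domain_roles: explicit assignments, Data Domain key -> role name.
    Returns None when the user has no role, i.e. no access to that domain.
    """
    if business_role == "BUSINESS_DOMAIN_ADMIN":
        return "DATA_DOMAIN_ADMIN"
    return data_domain_roles.get(data_domain)


assignments = {"data_domain_one": "DATA_DOMAIN_VIEWER"}
print(effective_data_domain_role(None, assignments, "data_domain_one"))
print(effective_data_domain_role(None, assignments, "data_domain_two"))
print(effective_data_domain_role("BUSINESS_DOMAIN_ADMIN", {}, "data_domain_two"))
```

Keeping the Business Domain rule in one place means the individual Data Domain assignments never need to be duplicated for business administrators.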

Same as DATA_DOMAIN_VIEWER plus:

Same as DATA_DOMAIN_EDITOR plus:

The DATA_DOMAIN_ADMIN role can view the Airflow DAGs of the Data Domain.

A DATA_DOMAIN_ADMIN can view all database objects in the DWH of the Data Domain.

Besides the standard Data Domains, there are also extra Data Domains.

An extra Data Domain provides additional permissions, functions, and database connections, such as:

These additional permissions, functions, or database connections are a matter of negotiation per extra Data Domain.

The additional permissions, if any, are then added to the standard roles mentioned above for the extra Data Domain.

Row Level Security settings at the Superset level can be used to additionally restrict the data that is displayed in a dashboard (e.g., only data of the user's own domain is displayed).

+| + | + | + | + | + |

|---|---|---|---|---|

| System Role | +Portal Role | +Portal Permission | +Menu / Submenu / Page in Portal | +Info | +

| HELLODATA_ADMIN | +SUPERUSER | +ROLE_MANAGEMENT | +Administration / Portal Rollenverwaltung | ++ |

| + | + | MONITORING | +Monitoring | ++ |

| + | + | DEVTOOLS | +Dev Tools | ++ |

| + | + | USER_MANAGEMENT | +Administration / Benutzerverwaltung | ++ |

| + | + | FAQ_MANAGEMENT | +Administration / FAQ Verwaltung | ++ |

| + | + | EXTERNAL_DASHBOARDS_MANAGEMENT | +Unter External Dashboards | +Kann neue Einträge erstellen und verwalten bei Seite External Dashboards | +

| + | + | DOCUMENTATION_MANAGEMENT | +Administration / Dokumentationsmanagement | ++ |

| + | + | ANNOUNCEMENT_MANAGEMENT | +Administration/ Ankündigungen | ++ |

| + | + | DASHBOARDS | +Dashboards | +Sieht im Menu Liste, dann je einen Link auf alle Data Domains auf die er Zugriff hat mit deren Dashboards auf die er Zugriff hat plus Externe Dashboards | +

| + | + | DATA_LINEAGE | +Data Lineage | +Sieht im Menu je einen Lineage Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_MARTS | +Data Marts | +Sieht im Menu je einen Data Mart Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_DWH | +Data Eng, / DWH Viewer | +Sieht im Menu Data Eng. das Submenu DWH Viewer | +

| + | + | DATA_ENG | +Data Eng. / Orchestration | +Sieht im Menu Data Eng. das Submenu Orchestration | +

| + | + | + | + | + |

| BUSINESS_DOMAIN_ADMIN | +BUSINESS_DOMAIN_ADMIN | +USER_MANAGEMENT | +Administration / Portal Rollenverwaltung | ++ |

| + | + | FAQ_MANAGEMENT | +Dev Tools | ++ |

| + | + | EXTERNAL_DASHBOARDS_MANAGEMENT | +Administration / Benutzerverwaltung | ++ |

| + | + | DOCUMENTATION_MANAGEMENT | +Administration / FAQ Verwaltung | ++ |

| + | + | ANNOUNCEMENT_MANAGEMENT | +Unter External Dashboards | ++ |

| + | + | DASHBOARDS | +Administration / Dokumentationsmanagement | +Sieht im Menu Liste, dann je einen Link auf alle Data Domains auf die er Zugriff hat mit deren Dashboards auf die er Zugriff hat plus Externe Dashboards | +

| + | + | DATA_LINEAGE | +Administration/ Ankündigungen | +Sieht im Menu je einen Lineage Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_MARTS | +Data Marts | +Sieht im Menu je einen Data Mart Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_DWH | +Data Eng, / DWH Viewer | +Sieht im Menu Data Eng. das Submenu DWH Viewer | +

| + | + | DATA_ENG | +Data Eng. / Orchestration | +Sieht im Menu Data Eng. das Submenu Orchestration | +

| + | + | + | + | + |

| DATA_DOMAIN_ADMIN | +DATA_DOMAIN_ADMIN | +DASHBOARDS | +Dashboards | +Sieht im Menu Liste, dann je einen Link auf alle Data Domains auf die er Zugriff hat mit deren Dashboards auf die er Zugriff hat plus Externe Dashboards | +

| + | + | DATA_LINEAGE | +Data Lineage | +Sieht im Menu je einen Lineage Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_MARTS | +Data Marts | +Sieht im Menu je einen Data Mart Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_DWH | +Data Eng, / DWH Viewer | +Sieht im Menu Data Eng. das Submenu DWH Viewer | +

| + | + | DATA_ENG | +Data Eng. / Orchestration | +Sieht im Menu Data Eng. das Submenu Orchestration | +

| + | + | + | + | + |

| DATA_DOMAIN_EDITOR | +EDITOR | +DASHBOARDS | +Dashboards | +Sieht im Menu Liste, dann je einen Link auf alle Data Domains auf die er Zugriff hat mit deren Dashboards auf die er Zugriff hat plus Externe Dashboards | +

| + | + | DATA_LINEAGE | +Data Lineage | +Sieht im Menu je einen Lineage Link für alle Data Domains auf die er Zugriff hat | +

| + | + | DATA_MARTS | +Data Marts | +Sieht im Menu je einen Data Mart Link für alle Data Domains auf die er Zugriff hat | +

| + | + | + | + | + |

| DATA_DOMAIN_VIEWER | +VIEWER | +DASHBOARDS | +Dashboards | +Sieht im Menu Liste, dann je einen Link auf alle Data Domains auf die er Zugriff hat mit deren Dashboards auf die er Zugriff hat plus Externe Dashboards | +

| + | + | DATA_LINEAGE | +Data Lineage | +Sieht im Menu je einen Lineage Link für alle Data Domains auf die er Zugriff hat | +

| System Role | Superset Role | Info |
|---|---|---|
| No Data Domain role | Public | The user should not get access to Superset functions, so the user gets a role with no permissions. |
| DATA_DOMAIN_VIEWER | BI_VIEWER plus roles for the dashboards the user was granted access to, i.e., the slugified dashboard names with prefix "D_" | Example: User is "DATA_DOMAIN_VIEWER" in a Data Domain. We grant the user access to the "Hello World" dashboard. The user then gets the role "BI_VIEWER" plus the role "D_hello_world" in Superset. |
| DATA_DOMAIN_EDITOR | BI_EDITOR | Has access to all dashboards, as the user is owner of the dashboards, plus SQL Lab permissions. |
| DATA_DOMAIN_ADMIN | BI_EDITOR plus BI_ADMIN | Has access to all dashboards, as the user is owner of the dashboards, plus SQL Lab permissions. |
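
The Superset mapping in the table above can be written down as a small function. Treat it as a sketch: the function names are hypothetical, and the slug rule used here (lower-case, runs of non-alphanumeric characters replaced by an underscore) is an assumption that merely reproduces the documented "Hello World" to "D_hello_world" example.

```python
import re
from typing import Iterable, List


def slugify(name: str) -> str:
    """Assumed slug rule: lower-case, non-alphanumeric runs become '_'."""
    return re.sub(r"[^a-z0-9]+", "_", name.lower()).strip("_")


def superset_roles(system_role: str, granted_dashboards: Iterable[str] = ()) -> List[str]:
    """Map a Data Domain role to the Superset roles listed in the table."""
    if system_role == "DATA_DOMAIN_VIEWER":
        return ["BI_VIEWER"] + [f"D_{slugify(d)}" for d in granted_dashboards]
    if system_role == "DATA_DOMAIN_EDITOR":
        return ["BI_EDITOR"]
    if system_role == "DATA_DOMAIN_ADMIN":
        return ["BI_EDITOR", "BI_ADMIN"]
    # No Data Domain role: a role without permissions.
    return ["Public"]


print(superset_roles("DATA_DOMAIN_VIEWER", ["Hello World"]))
```

Only viewers receive per-dashboard roles; editors and admins see all dashboards through ownership, so no "D_" roles are generated for them.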

| System Role | Airflow Role | Info |
|---|---|---|
| HELLODATA_ADMIN | Admin | User gets the DATA_DOMAIN_ADMIN role for all existing Data Domains and thus gets the permissions of those roles. The user additionally gets the Admin role. |
| BUSINESS_DOMAIN_ADMIN | | User gets the DATA_DOMAIN_ADMIN role for all existing Data Domains and thus gets the permissions of those roles. |
| No Data Domain role | Public | The user should not get access to Airflow functions, so the user gets a role with no permissions. |
| DATA_DOMAIN_VIEWER | Public | The user should not get access to Airflow functions, so the user gets a role with no permissions. |
| DATA_DOMAIN_EDITOR | Public | The user should not get access to Airflow functions, so the user gets a role with no permissions. |
| DATA_DOMAIN_ADMIN | AF_OPERATOR plus the role corresponding to the user's Data Domain key with prefix "DD_" | Example: User is "DATA_DOMAIN_ADMIN" in a Data Domain with the key "data_domain_one". The user then gets the role "AF_OPERATOR" plus the role "DD_data_domain_one" in Airflow. |
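
In the same spirit, the Airflow mapping can be sketched as a function that derives the role names from the system role and the relevant Data Domain keys. The function name is hypothetical, the system role is spelled HELLODATA_ADMIN as in the portal table, and the assumption that the two admin roles receive one "DD_" role per existing Data Domain is an interpretation of the Info column.

```python
from typing import List


def airflow_roles(system_role: str, data_domain_keys: List[str]) -> List[str]:
    """Map a HelloDATA system role to Airflow role names.

    data_domain_keys: keys of the Data Domains in which the user acts as
    DATA_DOMAIN_ADMIN (for the two admin roles: all existing Data Domains).
    """
    domain_admin_roles = ["AF_OPERATOR"] + [f"DD_{key}" for key in data_domain_keys]
    if system_role == "HELLODATA_ADMIN":
        return ["Admin"] + domain_admin_roles
    if system_role in ("BUSINESS_DOMAIN_ADMIN", "DATA_DOMAIN_ADMIN"):
        return domain_admin_roles
    # Viewers, editors, and users without a Data Domain role get no permissions.
    return ["Public"]


print(airflow_roles("DATA_DOMAIN_ADMIN", ["data_domain_one"]))
```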


This user manual should enable you to use the HelloDATA platform. It illustrates the features of the product and how to use them.

→ You can find more about the platform and its architecture in Architecture & Concepts.

The entry page of HelloDATA is the Web Portal.

As explained in Domain View, a key feature is to create business domains with n data domains. If you have access to more than one data domain, you can switch between them using the drop-down at the top.

The most important navigation button is the dashboard link. If you hover over it, you'll see three options to choose from.

You can either click the dashboard list in the hover menu (2) to see the list of dashboards with thumbnails, or directly choose your dashboard (3).

To see the data lineage (dependencies of your data tables), use the second menu option. Again, you choose the list or click directly on "data lineage" (2).

Button 2 will bring you to the project site, where you choose your project and load the lineage.

Once loaded, you see all sources (1) and dbt projects (2). On the detail page, you can see all the beautiful and helpful documentation, such as:

This view lets you access the universal data mart (UDM) layer:

These are cleaned and modeled data mart tables. Data marts are the tables that have been joined and cleaned from the source tables. This is effectively the last layer of HelloDATA BE, which the dashboards access. Dashboards should not access any earlier layer (landing zone, data storage, or data processing).

We use CloudBeaver for this, same as for the DWH Viewer later.

This is essentially a database access layer where you see all your tables, and you can write SQL queries based on your access roles with a provided tool (CloudBeaver).

You can choose pre-defined connections and query your data warehouse. You can also store queries that other users can see and use as well. Run your queries with (1).

You can configure many settings, such as user status, and more.

Please find all settings and features in the CloudBeaver Documentation.

The orchestrator is your task manager. You tell Airflow, our orchestrator, in which order the tasks will run. This is usually done ahead of time, and in the portal, you can see the latest runs and their status (successful, failed, etc.).
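
To illustrate what "telling the orchestrator the order" means, here is a toy example that uses only Python's standard library (`graphlib`, Python 3.9+), not Airflow itself. Each task lists the tasks it depends on, and a valid run order is derived from that. The task names are made up for illustration.

```python
from graphlib import TopologicalSorter

# Each key is a task; the set contains the tasks that must finish first.
dependencies = {
    "download_csv": set(),
    "create_tables": set(),
    "insert_data": {"download_csv", "create_tables"},
    "dbt_run": {"insert_data"},
    "dbt_docs": {"dbt_run"},
}

# static_order() yields one valid execution order for the whole graph.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

In Airflow, the same idea is expressed by declaring dependencies between the tasks of a DAG; the scheduler then works out a valid order for each run.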

Here you manage the portal configuration, such as users, roles, announcements, FAQs, and documentation management.

First, type your email and hit enter. Then open the drop-down and click on it.

Now type the name and hit Berechtigungen setzen to add the user:

You should see something like this:

Now choose the role you want to give:

And/or give access to specific data domains:

See more in role-authorization-concept.

In this portal role management, you can see all the roles that exist.

Warning

Creating new roles is not supported, despite the fact that the "Rolle erstellen" button exists. All roles are defined and hard-coded.

See how to create a new role below:

You can simply create an announcement that goes to all users via Ankündigung erstellen:

Then you fill in your message. Save it.

You'll see a success message if everything went well:

And this is how it looks to the users. It will appear until the user clicks the cross to close it.

The FAQ works the same as the announcements above. FAQs are shown on the starting dashboard, but you can set the granularity to a data domain:

And this is how it looks:

Lastly, you can document the system with documentation management. Here you have one document in which you can document everything in detail, and everyone can write to it. It will appear on the dashboard as well:

We provide two different ways of monitoring:

It shows you detailed information on the instances of HelloDATA: what the status of the Portal is, whether monitoring is running, etc.

In Monitoring your data domains, you see each system and the link to the native application. You can easily and quickly observe permissions, roles, and users by subsystem (1). Click the one you want, and you can choose different levels (2) for each and see its permissions (3).

By clicking on the blue underlined DBT Docs, you will be taken to the native dbt docs. The same is true if you click on an Airflow or Superset instance.

DevTools are additional tools HelloDATA provides out of the box, e.g., to send mail (Mailbox) or browse files (FileBrowser).

You can check in Mailbox (we use MailHog) which emails have been sent or which accounts have been updated.

Here you can browse all the documentation or code from the git repos in a file browser. We use FileBrowser here. Please use it with care, as some of the folders are system-relevant.

Log in

Make sure you have the login credentials to log in. Your administrator should be able to provide these to you.

Find further important references, know-how, and best practices on HelloDATA Know-How.



Quick Start for Developers

Want to run HelloDATA BE and test it locally? Run the following commands to deploy all components:

cd hello-data-deployment/docker-compose
docker-compose up -d

Note: Please refer to our docker-compose README for more information; there are some mandatory presets you need to configure.

Example: Multiple Superset Dashboards within a Data Domain

Within a Data Domain, several users build up different dashboards. Think of a dashboard as a specific use case, e.g., Covid, Sales, etc., that solves a particular purpose. Each of these dashboards consists of individual charts and data sources in Superset. Ultimately, what you see in the HelloDATA portal are the dashboards that combine all of the sub-components of what Superset provides.

Portal/UI View

As described in the intro, the portal is the heart of the HelloDATA application, with access to all critical applications.

Entry page of HelloDATA: When you enter the portal for the first time, you land on the dashboard where you have

More technical details are in the "Module deployment view" chapter below.

Module View and Communication

Modules

Going one level deeper, we see that we use different modules to make the portal and HelloDATA work.

We have the following modules:

At the center are two components, NATS and Keycloak. Keycloak, together with the HelloDATA portal, handles the authentication, authorization, and permission management of HelloDATA components. Keycloak is a powerful open-source identity and access management system. Its primary benefits include:

NATS, on the other hand, is central for handling communication between the different modules. Its power comes from integrating modern distributed systems. It is the glue between microservices, making and processing statements, or stream processing.

NATS focuses on hyper-connected moving parts and additional data each module generates. It supports location independence and mobility, whether the backend process is streaming or otherwise, and securely handles all of it.

NATS lets you connect mobile frontends or microservices flexibly. There is no need for static 1:1 communication with a hostname, IP, or port. Instead, NATS gives you m:n connectivity based on subjects. You can still use 1:1, but on top, you have things like load balancers, logs, system and network security models, proxies, and, most essential for us, sidecars. We use sidecars heavily in connection with NATS.

NATS can be deployed nearly anywhere: on bare metal, in a VM, as a container, inside K8S, on a device, or in whichever environment you choose. And all fully secure.
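
The idea of subject-based m:n connectivity can be shown with a few lines of plain Python. The class below is a toy in-memory stand-in, not the NATS client API, and the subject name `user.role` is made up. Publishers only name a subject; every handler subscribed to that subject receives the message, and no hostnames or ports are involved.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class InMemoryBus:
    """Toy stand-in for a subject-based message bus (not the NATS client API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, subject: str, handler: Handler) -> None:
        self._subscribers[subject].append(handler)

    def publish(self, subject: str, message: str) -> int:
        """Deliver the message to every subscriber; return how many received it."""
        handlers = self._subscribers.get(subject, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = InMemoryBus()
received: List[str] = []
bus.subscribe("user.role", lambda msg: received.append(f"superset:{msg}"))
bus.subscribe("user.role", lambda msg: received.append(f"airflow:{msg}"))
delivered = bus.publish("user.role", "grant DATA_DOMAIN_VIEWER")
print(delivered, received)
```

Adding a further subsystem only requires one more subscription; the publisher stays unchanged.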

"},{"location":"architecture/architecture/#subsystem-communication","title":"Subsystem communication","text":"Here is an example of subsystem communication. NATS, obviously at the center, handles these communications between the HelloDATA platform and the subsystems with its workers, as seen in the image below.

The HelloDATA portal has workers.\u00a0These workers are deployed as extra containers with sidecars, called \"Sidecar Containers\". Each module needing communicating needs a sidecar with these workers deployed to communicate with NATS. Therefore, the subsystem itself has its workers to share with NATS as well.

"},{"location":"architecture/architecture/#messaging-component-workers","title":"Messaging component workers","text":"Everything starts with a\u00a0web browser\u00a0session. The HelloDATA user accesses the\u00a0HelloDATA Portal\u00a0through HTTP. Before you see any of your modules or components, you must authorize yourself again, Keycloak. Once logged in, you have a Single Sign-on Token that will give access to different business domains or data domains depending on your role.

The HelloDATA portal sends an event to the EventWorkers via JDBC to the Portal database.\u00a0The\u00a0portal database\u00a0persists settings from the portal and necessary configurations.

The\u00a0EventWorkers, on the other side communicate with the different\u00a0HelloDATA Modules\u00a0discussed above (Keycloak, NATS, Data Stack with dbt, Airflow, and Superset) where needed. Each module is part of the domain view, which persists their data within their datastore.

"},{"location":"architecture/architecture/#flow-chart","title":"Flow Chart","text":"In this flow chart, you see again what we discussed above in a different way. Here, we\u00a0assign a new user role. Again, everything starts with the HelloDATA Portal and an existing session from Keycloak. With that, the portal worker will publish a JSON message via UserRoleEvent to NATS. As\u00a0the\u00a0communication hub for HelloDATA, NATS knows what to do with each message and sends it to the respective subsystem worker.

Subsystem workers execute that instruction, creating and populating roles in, e.g., Superset and Airflow, and report back to the spawned subsystem worker once done. The worker pushes that confirmation back to NATS, which notifies the portal worker, and at the end a message is shown in the HelloDATA portal.
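
The round trip described above can be sketched with a small in-memory stand-in for the message hub. This is neither the NATS client API nor HelloDATA's actual code: the `Hub` class, the subject names, and the payload fields are hypothetical and only illustrate the publish/subscribe flow of a role assignment.

```python
import json
from collections import defaultdict


class Hub:
    '''In-memory stand-in for the message hub (hypothetical, not the NATS API).'''

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, subject, handler):
        self._subscribers[subject].append(handler)

    def publish(self, subject, payload):
        # Messages travel as JSON strings, as in the flow described above.
        message = json.dumps(payload)
        for handler in list(self._subscribers[subject]):
            handler(json.loads(message))


def run_flow():
    hub = Hub()
    roles = {'superset': {}, 'airflow': {}}  # role store per subsystem
    portal_inbox = []                        # what the portal would display

    def make_subsystem_worker(name):
        def on_user_role_event(event):
            # Apply the role in this subsystem, then report completion.
            roles[name][event['user']] = event['role']
            hub.publish('user_role.done', {'subsystem': name, 'user': event['user']})
        return on_user_role_event

    for name in roles:
        hub.subscribe('user_role.event', make_subsystem_worker(name))

    # The portal worker listens for completion messages.
    hub.subscribe('user_role.done', portal_inbox.append)

    # The portal worker publishes the role change (assumed payload shape).
    hub.publish('user_role.event', {'user': 'alice', 'role': 'DATA_DOMAIN_VIEWER'})
    return roles, portal_inbox
```

Calling `run_flow()` returns the roles each simulated subsystem has stored and the completion messages that reached the portal side, one per subsystem.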

"},{"location":"architecture/architecture/#building-block-view","title":"Building Block View","text":""},{"location":"architecture/data-stack/","title":"Data Stack","text":"We'll explain which data stack is behind HelloDATA BE.

"},{"location":"architecture/data-stack/#control-pane-portal","title":"Control Pane - Portal","text":"The\u00a0differentiator of HelloDATA\u00a0lies in the Portal. It combines all the loosely open-source tools into a single control pane.

The portal lets you see:

You can find more about the navigation and the features in the\u00a0User Manual.

"},{"location":"architecture/data-stack/#data-modeling-with-sql-dbt","title":"Data Modeling with SQL - dbt","text":"dbt\u00a0is a small database toolset that has gained immense popularity and is the facto standard for working with SQL. Why, you might ask? SQL is the most used language besides Python for data engineers, as it is\u00a0declarative and easy to learn the basics, and many business analysts or people working with Excel or similar tools might know a little already.

The declarative approach is handy as you only define the what, meaning you determine which columns you want in the SELECT and which table to query in the FROM clause. You can do more advanced things with WHERE, GROUP BY, etc., but you do not need to care about the how. You do not need to know in which database, partition, segment, or storage the data is stored. You do not need to know if an index makes sense to use. All of it is handled by the query optimizer of Postgres (or any database supporting SQL).

But let's face it: SQL also has its downsides. If you have worked extensively with SQL, you know the spaghetti code that usually results from it. The issue is repeatability: there is no variable we can set and reuse in SQL. If you are familiar with them, you can achieve a better structure with CTEs, which allow you to define specific queries as a block to reuse later. But this only works within one single query, and it is mainly handy if the query is already long.
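
A minimal sketch of a CTE, run from Python against an in-memory SQLite database instead of Postgres so that it is self-contained. The `orders` table and its columns are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE orders (customer TEXT, amount REAL)')
conn.executemany(
    'INSERT INTO orders VALUES (?, ?)',
    [('anna', 10.0), ('anna', 30.0), ('ben', 5.0)],
)

query = '''
WITH customer_totals AS (          -- the CTE: a named, reusable block
    SELECT customer, SUM(amount) AS total
    FROM orders
    GROUP BY customer
)
SELECT customer, total
FROM customer_totals
WHERE total > (SELECT AVG(total) FROM customer_totals)  -- reused here
ORDER BY customer
'''

rows = conn.execute(query).fetchall()
conn.close()
```

The block `customer_totals` is referenced twice, but only inside this one statement; a second query cannot reuse it.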

But what if you'd like to define your facts and dimensions as separate queries and reuse them in another query? You'd need to split the queries, persist the result of the first one to disk, and use that table in the FROM clause of the following query. But what if we change something in the query, or even rename it? We won't notice the effect on the dependent queries, and we would first need to find out which queries depend on each other. There is no lineage or dependency graph.

It takes a lot of work to stay organized with SQL. Databases offer little support here, as SQL is declarative: you have to work out yourself how to store the queries in git and how to run them.

That's where dbt comes into play. dbt lets you create these dependencies within SQL. You can declaratively build on each query, and you'll get errors if one query changes but the queries that depend on it do not. You get a lineage graph (see an example), unit tests, and more. It's like having an assistant that helps you do your job. It adds software engineering practices on top of SQL engineering.
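
To illustrate how dependencies declared inside SQL can yield a lineage graph and a build order, here is a small sketch. It relies on dbt's `ref()` templating syntax, but the parsing and ordering below are a simplification written for this example and not how dbt is implemented; the model names are hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models; each value is the SQL template of one model.
models = {
    'stg_animals': '''SELECT * FROM raw_animals''',
    'dim_species': '''SELECT DISTINCT species FROM {{ ref('stg_animals') }}''',
    'fct_counts': '''SELECT s.species, COUNT(*) AS n
                     FROM {{ ref('stg_animals') }} a
                     JOIN {{ ref('dim_species') }} s ON a.species = s.species
                     GROUP BY s.species''',
}

# Matches ref('model_name') and captures the model name.
REF_PATTERN = re.compile('''ref[(]'([A-Za-z0-9_]+)'[)]''')


def lineage(sql_by_model):
    '''Map each model to the set of models it references.'''
    graph = {}
    for name, sql in sql_by_model.items():
        deps = set(REF_PATTERN.findall(sql))
        unknown = deps - set(sql_by_model)
        if unknown:
            # A renamed or removed upstream model is caught here.
            raise KeyError(f'{name} references unknown models: {sorted(unknown)}')
        graph[name] = deps
    return graph


graph = lineage(models)
# Upstream models come first, so every model is built after its inputs.
build_order = list(TopologicalSorter(graph).static_order())
```

Renaming `stg_animals` without updating the models that reference it makes `lineage` raise an error instead of silently producing a broken query chain.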

The danger to be aware of, because building models becomes so easy, is ending up with thousands of tables. Even though dbt catches many errors when it compiles the models, good data modeling techniques remain essential to succeed.

Below, you see dbt docs, lineage, and templates: 1. Project Navigation 2. Detail Navigation 3. SQL Template 4. SQL Compiled (the actual SQL that gets executed) 5. Full data lineage with the sources and transformations for the current object

Or the zoomed-in dbt lineage (when clicked):

"},{"location":"architecture/data-stack/#task-orchestration-airflow","title":"Task Orchestration - Airflow","text":"Airflow\u00a0is the natural next step. If you have many SQLs representing your business metrics, you want them to run on a daily or hourly schedule triggered by events. That's where Airflow comes into play. Airflow is, in its simplest terms, a task or workflow scheduler, which tasks or\u00a0DAGs\u00a0(how they are called) can be written programatically with Python. If you know\u00a0cron\u00a0jobs, these are the lowest task scheduler in Linux (think * * * * *), but little to no customization beyond simple time scheduling.

Airflow is different. Writing the DAGs in Python allows you to do whatever your business logic requires before or after a particular task is started. In the past, ETL tools like Microsoft SQL Server Integration Services (SSIS) and others were widely used. They were where your data transformation, cleaning and normalisation took place. In more modern architectures, these tools aren't enough anymore. Moreover, code and data transformation logic are much more valuable to other data-savvy people (data analysts, data scientists, business analysts) in the company when they are accessible instead of locked away in a proprietary format.

Airflow, or an orchestrator in general, ensures the correct execution of dependent tasks. It is very flexible and extensible with operators from the community or the built-in capabilities of the framework itself.
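
What correct execution of dependent tasks means can be sketched in plain Python. This is not the Airflow API: `run_dag`, the task names, and the state labels are illustrative assumptions showing only that upstream tasks run first and that downstream tasks are not executed when an upstream task fails.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, upstream):
    '''Run callables in dependency order.

    tasks: name -> zero-argument callable
    upstream: name -> set of task names that must succeed first
    '''
    graph = {name: upstream.get(name, set()) for name in tasks}
    state = {}
    for name in TopologicalSorter(graph).static_order():
        if any(state[dep] != 'success' for dep in graph[name]):
            state[name] = 'upstream_failed'  # skipped, never executed
            continue
        try:
            tasks[name]()
            state[name] = 'success'
        except Exception:
            state[name] = 'failed'
    return state


def broken_transform():
    raise RuntimeError('bad input data')


executed = []
tasks = {
    'extract': lambda: executed.append('extract'),
    'transform': broken_transform,
    'load': lambda: executed.append('load'),
}
upstream = {'transform': {'extract'}, 'load': {'transform'}}
state = run_dag(tasks, upstream)
```

Here `extract` succeeds, `transform` fails, and `load` is therefore never started. A plain cron schedule would have run `load` regardless.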

"},{"location":"architecture/data-stack/#default-view","title":"Default View","text":"Airflow DAGs - Entry page which shows you the status of all your DAGs - what's the schedule of each job - are they active, how often have they failed, etc.

Next, you can click on each of the DAGs and get into a detailed view:

"},{"location":"architecture/data-stack/#airflow-operations-overview-for-one-dag","title":"Airflow operations overview for one DAG","text":"It shows you the dependencies of your business's various tasks, ensuring that the order is handled correctly.

"},{"location":"architecture/data-stack/#dashboards-superset","title":"Dashboards - Superset","text":"Superset\u00a0is the entry point to your data. It's a popular open-source business intelligence dashboard tool that visualizes your data according to your needs.\u00a0It's able to handle all the latest chart types. You can combine them into dashboards filtered and drilled down as expected from a BI tool. The access to dashboards is restricted to authenticated users only. A user can be given view or edit rights to individual dashboards using roles and permissions. Public access to dashboards is not supported.

"},{"location":"architecture/data-stack/#example-dashboard","title":"Example dashboard","text":""},{"location":"architecture/data-stack/#supported-charts","title":"Supported Charts","text":"(see live in action)

"},{"location":"architecture/data-stack/#storage-layer-postgres","title":"Storage Layer - Postgres","text":"Let's start with the storage layer. We use Postgres, the currently\u00a0most used and loved database. Postgres is versatile and simple to use. It's a\u00a0relational database\u00a0that can be customized and scaled extensively.

"},{"location":"architecture/infrastructure/","title":"Infrastructure","text":"Infrastructure is the part where we go into depth about how to run HelloDATA and its components on\u00a0Kubernetes.

"},{"location":"architecture/infrastructure/#kubernetes","title":"Kubernetes","text":"Kubernetes and its platform allow you to run and orchestrate container workloads. Kubernetes has become\u00a0popular\u00a0and is the\u00a0de-facto standard\u00a0for your cloud-native apps to (auto-)\u00a0scale-out\u00a0and deploy the various open-source tools fast, on any cloud, and locally. This is called cloud-agnostic, as you are not locked into any cloud vendor (Amazon, Microsoft, Google, etc.).

Kubernetes is infrastructure as code, specifically YAML, allowing you to version and test your deployment quickly. All the resources in Kubernetes, including Pods, Configurations, Deployments, Volumes, etc., can be expressed in YAML files, using Kubernetes tools like Helm. Developers can quickly write applications that run across multiple operating environments. Costs can be reduced by scaling down, and any programming language can be used as long as it runs with a simple Dockerfile. Its modularity and abstraction make it manageable; also, with the use of containers, you can monitor all your applications in one place.

Kubernetes Namespaces provide a mechanism for isolating groups of resources within a single cluster. Names of resources need to be unique within a namespace but not across namespaces. Namespace-based scoping is applicable only to namespaced objects (e.g., Deployments, Services, etc.) and not to cluster-wide objects (e.g., StorageClass, Nodes, PersistentVolumes, etc.).
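
The scoping rule can be made concrete with a toy model. This is not a Kubernetes client; the `Cluster` class and the namespace names are hypothetical, and the set of cluster-scoped kinds is limited to the examples named above.

```python
CLUSTER_SCOPED = {'Node', 'StorageClass', 'PersistentVolume'}


class Cluster:
    '''Toy model of Kubernetes name scoping (not a Kubernetes client).'''

    def __init__(self):
        self._objects = set()

    def create(self, kind, name, namespace='default'):
        if kind in CLUSTER_SCOPED:
            key = (kind, None, name)       # namespace is ignored
        else:
            key = (kind, namespace, name)  # unique per namespace
        if key in self._objects:
            raise ValueError(f'{kind} {name!r} already exists')
        self._objects.add(key)
        return key


cluster = Cluster()
cluster.create('Deployment', 'superset', namespace='data-domain-a')
cluster.create('Deployment', 'superset', namespace='data-domain-b')  # allowed
cluster.create('StorageClass', 'fast')
```

The same Deployment name can exist once per namespace, while a second `StorageClass` named `fast` is rejected no matter which namespace is passed.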

Here, we have a look at the module view with an inside view of accessing the\u00a0HelloDATA Portal.

The Portal API is served with Spring Boot, WildFly, and Angular.

"},{"location":"architecture/infrastructure/#storage-data-domain","title":"Storage (Data Domain)","text":"Following up on how storage is persistent for the\u00a0Domain View\u00a0introduced in the above chapters.\u00a0

"},{"location":"architecture/infrastructure/#data-domain-storage-view","title":"Data-Domain Storage View","text":"Storage is an important topic, as this is where the business value and the data itself are stored.

From a Kubernetes and deployment view, everything is encapsulated inside a Namespace. As explained in the above \"Domain View\", we have different layers from one Business domain (here Business Domain) to n (multiple) Data Domains.\u00a0

Each domain holds its data on\u00a0persistent storage, whether Postgres for relational databases, blob storage for files or file storage on persistent volumes within Kubernetes.

GitSync is a tool we added to allow\u00a0GitOps-type deployment. As a user, you can push changes to your git repo, and GitSync will automatically deploy that into your cluster on Kubernetes.

"},{"location":"architecture/infrastructure/#business-domain-storage-view","title":"Business-Domain Storage View","text":"Here is another view that persistent storage within Kubernetes (K8s) can hold data across the Data Domain. If these\u00a0persistent volumes\u00a0are used to store Data Domain information, it will also require implementing a backup and restore plan for these data.

Alternatively, blob storage on any\u00a0cloud vendor or services\u00a0such as Postgres service can be used, as these are typically managed and come with features such as backup and restore.

"},{"location":"architecture/infrastructure/#k8s-jobs","title":"K8s Jobs","text":"HelloDATA uses Kubernetes jobs to perform certain activities

"},{"location":"architecture/infrastructure/#cleanup-jobs","title":"Cleanup Jobs","text":"Contents:

HelloDATA can be operated as different platforms, e.g., development, test, and/or production platforms. The deployment is based on common CI/CD principles. It uses Git and Flux internally to deploy its resources onto the specific Kubernetes clusters. In case of resource shortages, the underlying platform can be extended with additional resources upon request. Horizontal scaling of the infrastructure can be done within the given resource boundaries (e.g., multiple pods for Superset).

"},{"location":"architecture/infrastructure/#platform-authentication-authorization","title":"Platform Authentication Authorization","text":"See at\u00a0Roles and authorization concept.

"},{"location":"concepts/showcase/","title":"Showcase: Animal Statistics (Switzerland)","text":""},{"location":"concepts/showcase/#what-is-the-showcase","title":"What is the Showcase?","text":"It's the demo cases of HD-BE, it's importing animal data from an external source and loading them with Airflow, modeled with dbt, and visualized in Superset.

It will hopefully show you how the platform works, and it comes pre-installed with the docker-compose installation.