- Installed kubectl binary using curl: https://kubernetes.io/docs/tasks/tools/install-kubectl/

- Have secure boot disabled and virtualization enabled from bios

- Installed virtualBox and minikube: https://kubernetes.io/docs/tasks/tools/install-minikube/

- Installed kubectl completion for fish shell: https://github.com/evanlucas/fish-kubectl-completions

minikube versionminikube start- Now I have a running Kubernetes clster. Minikube started a virtual machine and a Kubernetes cluster is now running in that VM.

minikube dashboard- shows gui

- The cluster can be interacted with using the kubectl CLI. This is the main approach used for managing Kubernetes and the applications running on top of the cluster.

kubectl cluster-info- shows links

kubectl cluster-info dump- further diagnose in terminal

kubectl get nodes- This command shows all nodes that can be used to host our applications. Now we have only one node, and we can see that it’s status is ready (it is ready to accept applications for deployment).

- Before: applications were installed directly onto specific machines as packages deeply integrated into the host. Now: make the applications containerized. Deploy them into a cluster of machines.

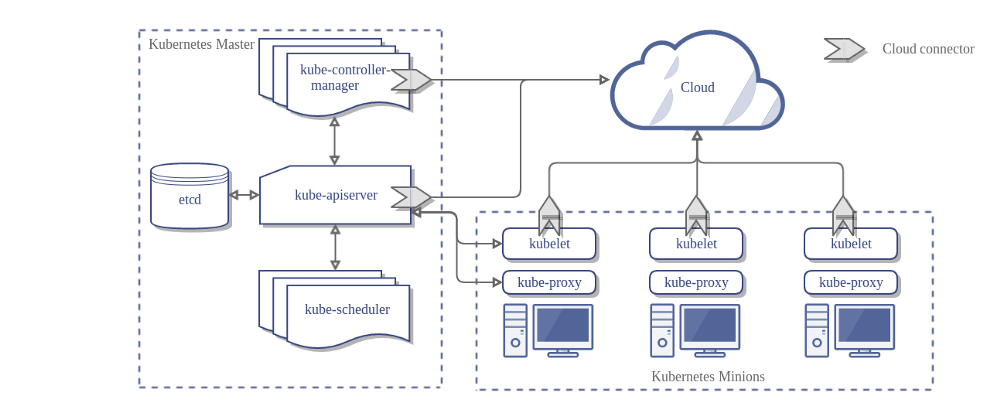

- Master coordinates the cluster - schedules applications, maintains desired state, scales app and rolls out new updates.

- Nodes are the workers that run applications. It's a VM or a physical computer that serves as a worker machine in a Kubernetes cluster. It has a kubelet, that manages the node and communicates with master. Each node has docker/rkt too. A cluster has at least three nodes.

- To deploy an application, you tell the master the start app containers. The master schedules the containers to run on the nodes. The nodes communicates with the master using k8s api, which the master exposes. Users can also communicate withe master using the api.

- A k8s cluster can be deployed on either physical or vm. We can use minikube to make a k8s cluster on local machine, which has only one node.

minikube versionensure that minikube is installedminikube start- minikube created a virtual machine and a k8s cluster is running in that vm.kubectl version- it provides an interface to manage k8s. This command checks if kubectl is installed properly.kubectl cluster-info- shows the cluster detailskubectl get nodes- shows all the nodes that can be used to host apps. Now in our local machine, we have only one node and we can it's status ready and it's role is master

- Now you can deploy apps on top of the cluster. You need to create a Deployment Configuration. This instructs how to create and update instances of your app. Then Master schedules the instances onto individual Nodes.

- Once instances of the app are created, a Kubernetes Deployment Controller continuously monitors these instances. If a Node containing an instances goes down, the Deployment controller replaces it. This provides a self-healing mechanism.

- Before: scripts were used to start the app, but doesn't help to recover. Now: Controller keeps them running.

- kubectl is a command line interface that communicates with the cluster using the k8s api.

- To deploy, you need to specify the container image and number of replicas to run. You can change the info by updating the Deployment.

kubectl run <deployment-name> --image=<image-name> --port=<8080-or-something-else>kubectl runcommand creates a new Deployment. It need deployment name and app image location (if the image aren't hosted on DockerHub, needs to include full repo url). Also needs specific port to run- this command searched for a Node where an instance of the app could be run

- scheduled the app to run on that Node

- configured the cluster to reschedule the instance on a new node when needed

- Q: fahim couldn't run the deployment because he didn't have

EXPOSEin his Dockerfile. After adding the line, deployment ran. And--portvalue inkubectl runcommand doesn't have to matchEXPOSEvalue. Why? kubectl run busyboxkube --image=busyboxpods doesn't run because the os container have nothing to do - it will be inRunningstatus as long as it has something to do - Shudipta added a infinite loop and it ran and you can runkubectl exec -it <pod-name> shand run various comamnds on sh.

kubectl get deploymentsshows the deployments, their instances and state.kubectl proxycreates a proxy - so far kubectl were communicating with k8s cluster using api, but after proxy command we can communicate with k8s api too (through browser or curl)

- A pod represents one or more containers and has some shared storage as Volumes, networking as a unique cluster IP address, information about each container such as image version or which ports to use.

- A pod models an application-specific "logical host" and can contain different application which are relatively tightly coupled.

- The contains in same Pod share an IP address and port space - co-located/co-scheduled/runs-in-same-context

- Pods are atomic unit on K8s platform. A deployment creates Pods with containers inside them. Each pod is tied to its Node, where it is scheduled. When a Node fails, identical pods are scheduled on other available Nodes in the cluster.

- One node is either one physical machine (laptop) or virtual machine (I can create more than one node in my laptop with VM). A node can have multiple pods and k8s master automatically handles scheduling the pods across the Nodes in the cluster, based on the resources available on each Node.

- One node has kubelet - a process responsible for communicating with the Master. It manages pods and containers.

- Node contains container runtime too (Docker/rkt) which pulls the container image, unpacks the container and runs the pp.

kubectl get podslists pods.kubectl describe podsshows details (yaml?)kubectl proxy- As pods are running in an isolated, private network - we need to proxy access to them so we can interact with them.kubectl proxy --port=3456adds a port- default is 8001

curl localhost:8001from browser

export POD_NAME=$(kubectl get pods -o go-template --template '{{range .items}}{{.metadata.name}}{{"\n"}}{{end}}')- QJenny

curl http://localhost:<mentioned-or-default-port>/api/v1/namespaces/default/pods/$POD_NAME/proxy/(from my laptop)- QJenny - shows yaml-like things

kubectl logs <pod-name>- Anything that the application would normally send to STDOUT becomes logs for the container within the Pod (ommitted name of container as we have only one container now).-fflag continues to watchkubectl exec <pod-name> <command>- we can execute commands directly on the container once the Pod is up and running. (here we ommitted the container name as there's only one container in our pod)kubectl exec -it <pod-name> bash- lets us use the bash inside the container(again, we have one container)- we can use

curl localhost:8080to from inside the container (after accessing bash of the pod)- as we used

4321in our dockerfile - we would uselocalhost:4321 --target-portis4321

- as we used

- Pods are mortal. They have a lifecycle. If a node dies, pods running on that node are lost too.

- Replication Controller creates new pods when that happens (by rescheduling the pod on available nodes).

- Pods have unique IP across a K8s cluster.

- Front-end shouldn't care about backend replicas or if a pod is lost and created.

- A service in K8s defines a logical set of Pods and a policy by to access them. Service is defined using YAML.

- The set of Pods targeted by a Service is usually determined by a

LabelSelector - Unique IP addresses are not exposed to outside cluster with a Service. Services can be exposed in different ways by specifying a type in the ServiceSpec.

ClusterIP(default) exposes the service on an internal IP in the cluster. This makes the Service onlu reachable from within the cluster.NodePortexposes the Service on the same port of each selected Node in the cluster using NAT. Makes a service accessible from outside the cluster using<NodeIP>:<NodePort. Superset of ClusterIP.LoadBalancercreates an external load balancer in the current cloud (if supported) and assigns a fixed, external IP to the Service. Superset of NodePort.ExternalNameexposes the service using an arbitrary name (specified byexternalNamein the spec) by returning aCNAMErecord with the name. No proxy is used. This type requires v1.7 or higher ofkube-dns

- A service created without selector will also not create the corresponding Endpoints object. This allows users to manually map a Service to specific endpoints. Another possibility why there maybe no selector is you are strictly using

type:ExternalName

- Service routes traffic for a set of pods and allows pods to die and replicate in k8s without impacting the app (services exposes pods through a common port(in case of node port) - so an application sees the service - if a node/pod is down service is recreating those pods internally - application is not harmed)

- Labels are key/value pairs attached to objects. It can attached to objects at creation or later on.

- A Service can be created at time of deployment by using

--exposein kubectl. - A Service is created by default when minikube starts the cluster.

kubectl get serviceskubectl expose deployment/<deployment-name> --type="NodePort" --port 4321created a new service and exposed it as NodePort type. minikube doesn't support LoadBalancer yet.- when we are mentioning

--portand not mentioning--target-port, target-port takes the --port by default - my

EXPOSEin Dockerfile is4321, we can access the service only if--target-portis4321 - services works on pods - a service can have pods from multiple nodes

- when we are mentioning

kubectl describe services/<service-name>shows detailsexport NODE_PORT=$(kubectl get services/kubernetes-bootcamp -o go-template='{{(index .spec.ports 0).nodePort}}')curl $(minikube ip):$NODE_PORTminikube ipshows the node-ip- browse

192.168.99.100:30250

kubectl describe deploymentshows name of the label among many other infoskubectl get pods -l run=<pod-label>kubectl get pods -l run=booklistkube2

kubectl get services -l run=<service-label>kubectl get services -l run=booklistkube2

export POD_NAME=$(kubectl get pods -o go-template --template '{{range .items}}{{.metadata.name}}{{"\n"}}{{end}}')- QJenny

kubectl label pod <pod-name> app=<varialbe-name>applies a new label (pinned the application version to the pod)kubectl describe pods <pod-name>- if we use

-lflag,use <pod-label> - otherwise, use

<pod-name> - same for

kubectl get pods

- if we use

kubectl describe pods -l app=<app-label-namekubectl delete service -l run=<label-name>orapp=<label-namedeletes a service- or

kubectl delete service <service-name

- or

kubectl label pod <pod-name> app=v2 --overwriteoverwrites a label namekubectl label service <service-name> app=<label-namewe can add service label too! (:D starting to get the label-things)kubectl edit service <service-name> -o yamlyou can edit details of the service-o yamlcan be ommitted- used

--port=8080- later saw that--target-portgot the same from this comamnd and edited target-port(only) to4321and it worked

kubectl get service <service-name> -o yamlshows the yamlkubectl get deployment <deployment-name> -o yamlkubectl edit deployment <deployment-name> -o yaml-o yamlcan be ommitted

kubectl get pod <pod-name> -o yamlkubectl edit pod <pod-name> -o yaml-o yamlcan be ommitted

kubectl exec -it <pod-name> sh- thenwget localhost:4321(--target-port)kubectl exec -it <pod-name> wget localhost:4321

- Scaling is accomplished by changing the number of replicas in a Deployment.

- Scaling will increase the number of Pods to the new desired state - schedules them to nodes with available resources. Scaling to zero will terminate all pods.

- Services have an intergrated load balancer that will distribute network traffic to all pods of an exposed deployment. Services will monitor continuously the running pods using endpoints, to ensure the traffic is sent only to available pods.

kubectl get deploycommand has- DESIRED shows the configured number of replicas

- CURRENT shows how many replicas are running now

- UP-TO-DATE shows the number of replicas that were updated to match the desired state

- AVAILABLE state shows the number of replicas AVAILABLE to the users

kubectl scale deploy/booklistkube2 --replicas=4makes the number of pods to 4kubectl get pods -o widewill also show IP and NODEkubectl get deploy booklistkube2will now show the scaling up eventkubectl describe services <service-name>shows 4 endpoints asIP:4321(4 different IP and one common port).- creating replicas will automatically be added to the service

- open

kubectl get pods -w,kubectl get deploy -w,kubectl get replicaset -win 3 pane and scale/edit a deployment in another pane and see the changes :D- replicaset name + different extension = different pod name

- scaling up/down doesn't change the replicaset name - because old pods are useful. but updating deployment replaces every pod - so new replicaset name and pod name.

- Rolling updates allow deployments' update to take place with zero downtime by incrementally updating pods instances with new ones.

- By default, the max no of pods that can be unavailable during the update and the max no of new pods that can be created, is one. Both options can be configured to either number or percentage. Updates are versioned and can be reverted back to previous version.

- Similar to application Scaling, if a deployment is exposed publicly, the Service will load-balance the traffic only to available Pods during the update.

kubectl set image deploy/booklistkube2 <container-name>=<new-image-name>changes imagekubectl rollout status deploy/booklistkube2shows rollout statuskubectl rollout undo deploy/booklistkube2reverts back to previous versionkubectl get eventskubectl config view

- https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/

- K8s Master is collection of these process: kube-apiserver, kube-controller, kube-scheduler

- Each non-master node has two processes:

- kubelet, which communicates with the K8s Master

- kube-proxy, a network proxy which reflects K8s networking services on each node

- Various parts of the K8s Control Plane, such as the K8s Master and kubelet processes, govern how K8s communicates with your cluster.

- Open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.

- A container platform/a microservices platform/a portable cloud platform and a lot more.

- [Google's Borg system is a cluster manager that runs hundreds of thousands of jobs, from many thousands of different applications, across a number of clusters each with up to tens of thousands of machines.]

- Continuous Integration, Delivery, and Deployment (CI/CD)

- Kubernetes is not a mere orchestration system. In fact, it eliminates the need for orchestration. The technical definition of orchestration is execution of a defined workflow: first do A, then B, then C. In contrast, Kubernetes is comprised of a set of independent, composable control processes that continuously drive the current state towards the provided desired state. It shouldn’t matter how you get from A to C. Centralized control is also not required.

- why containers?

- The Old Way to deploy applications was to install the applications on a host using the operating-system package manager. This had the disadvantage of entangling the applications’ executables, configuration, libraries, and lifecycles with each other and with the host OS. One could build immutable virtual-machine images in order to achieve predictable rollouts and rollbacks, but VMs are heavyweight and non-portable.

- The New Way is to deploy containers based on operating-system-level virtualization rather than hardware virtualization. These containers are isolated from each other and from the host: they have their own filesystems, they can’t see each others’ processes, and their computational resource usage can be bounded. They are easier to build than VMs, and because they are decoupled from the underlying infrastructure and from the host filesystem, they are portable across clouds and OS distributions.

- Containers are small and fast. So one-to-one application-to-image relation if built.

- The name Kubernetes originates from Greek, meaning helmsman or pilot, and is the root of governor and cybernetic. K8s is an abbreviation derived by replacing the 8 letters

ubernetewith8

- Master components provide the cluster's control plane - makes global decisions, e.g. scheduling, detecting, responding

- Master components can be run on any machine in the cluster - usually set up scripts start all master components on the same machine and do not run user containers on this machine.

kube-apiserverexposes k8s api - front end for k8s control plane. designed to scale horizontally, that is - it scales by deploying more instances (QJenny - vertical scaling is creating new replicas with increased resources?)etcdis key value store used as k8s' backing store for all cluster data.kube-schedulerschedules newly created pods to nodes based on individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interface and deadlines.kube-controller managerruns controllers. Logically, each controller is a seperate process, but to reduce complexity, they are all compiled into a single binary and run in a single processNode Controlleris responsible for noticing and responding when nodes go downReplication Controlleris responsible for maintaining correct number of podsEndpoints Controllerpopulates the Endpoints object (joins Services and Pods)Service Account & Token Controllerscreate default accounts and API access tokens for new namespaces.

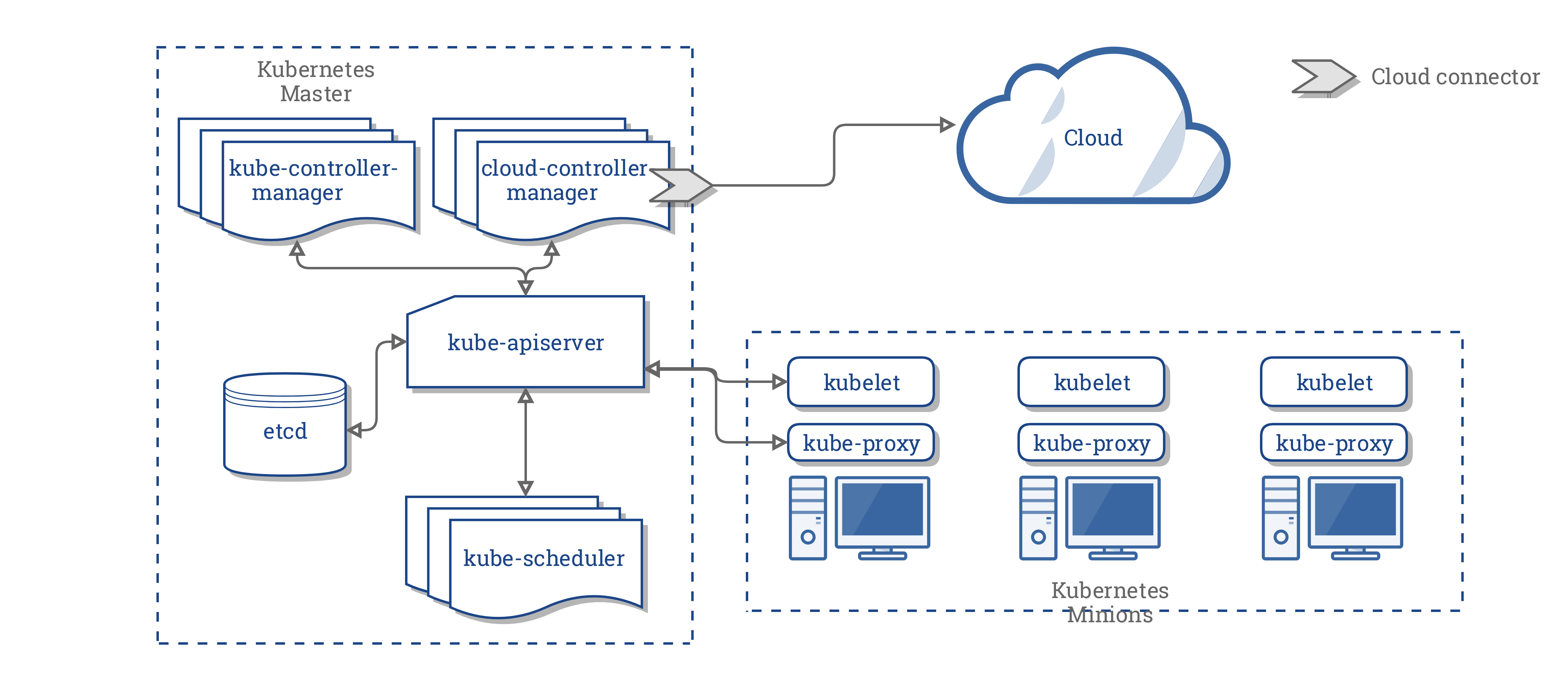

cloud-controller-managerruns controllers that interact with the underlying cloud providers. It runs cloud-provider-specific controller loops only, must disable these controller loops in the kube-controller-manager by setting--cloud-providerflag toexternal. It allows cloud vendors code and k8s core to evolve independent of each other. In prior releases, the core k8s code was dependent upon cloud-provider-specific code for functionality. In future releases, code specific to cloud vendors should be maintained by the cloud vendor themselves, and linked to cloud-controller-manager while running k8s. These controllers have cloud provider dependencies:Node Controllerfor checking the cloud provider to determine if a node has been deleted in the cloud after it stops responding.Route Controllerfor setting up routes in the underlying cloud infrastructureService Controllerfor creating, updating and deleting cloud provider load balancersVolume Controllerfor creating, attaching and mounting volumes, and interacting with the cloud provider to orchestrate volumes

kubeletruns on each in the cluster, takes a set of PodSpecs and ensures that the containers are running and healthy.kube-proxyenables k8s service abstraction by maintaining network rules on the host and performing connection forwardingContainer Runtimeis the software that is responsible for running containers, e.g. Docker, rkt, runc, any OCI runtime-spec implementation

- Addons are pods and services that implement cluster features. They may be managed by Deployments, ReplicationControllers. Namespaced addon objects are created in

kube-systemnamespace.- UJenny

DNS- all k8s clusters should have cluster DNS, as many examples rely on it. Cluster DNS is DNS server, in addition to the other DNS server(s) in your environment, which serves DNS records for k8s services. Containers started by k8s automatically include this DNS server in their DNS searches- UJenny

Web UI(Dashboard)Container Resource Monitoringrecords generic time-series metrics about containers in a central database and provides a UI for browsing that data- UJenny

Cluster-level Loggingis responsible for saving container logs to central log store with search/browsing interface- UJenny

- https://kubernetes.io/docs/concepts/overview/kubernetes-api/

- UJenny

- API continuously changes

- to make it easier to eliminate fields or restructure resource representation, k8s supports multiple API versions, each at a different API path, such as

/api/v1or/apis/extensions/v1beta1 - Alpha: e.g,

v1alpha1. may be buggy. - Beta: e.g,

v2beta3. code is well tested - Stable: e.g,

v1. will appear in released software.

- K8s objects represent the state of a cluster. They can describe

- what containerized applications are running on which nodes

- available resources

- policies areound how those applications behave (restart, upgrade, fault-tolerance)

- Objects represents the cluster's desired state

kubectlis used to create/modify/delete the objects through api calls. alternatively, client-go can be used- Every k8s object has two field - the object spec is provided, it describes desired state. The object status describes the actual status of the object. k8s control plane actively manages actual state to match the desired state

- deployment is an object. spec can have no of replicas to make. it one fails, k8s replaces the instance.

- k8s api is used (either directly or via kubectl) to create an object. that API request must have some information as JSON in the request body. I provide the information to kubectl in a

.yamlfile. kubectl convert the info to JSON

kind:

metadata:

name:

labels:

app:

spec:

replicas:

template:

metadata:

name:

labels:

app:

spec:

containers:

- name:

image:

imagePullPolicy: IfNotPresent

ports:

- containerPort:

restartPolicy: Always

selector:

matchLabels:

app:

- Deployment struct

apiVersiondefines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. +optionalkindKind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. Cannot be updated. +optionalmetadataStandard object metadata. +optionalnameName must be unique within a namespace. Cannot be updated. +optionallabelsMap of string keys and values that can be used to organize and categorize (scope and select) objects. May match selectors of replication controllers and services. +optionaluidUID is the unique in time and space value for this object. It is typically generated by the server on successful creation of a resource and is not allowed to change on PUT operations. +optional

specSpecification of the desired behavior of the Deployment. +optionalreplicasNumber of desired pods. This is a pointer to distinguish between explicit zero and not specified. Defaults to 1. +optionaltemplateTemplate describes the pods that will be created.metadataStandard object's metadata. +optionalnamesamelabelssameappsame

specSpecification of the desired behavior of the pod. +optionalcontainersList of containers belonging to the pod. Containers cannot currently be added or removed. There must be at least one container in a Pod. Cannot be updated.nameName of the container specified as a DNS_LABEL. Each container in a pod must have a unique name (DNS_LABEL). Cannot be updated.imageDocker image name. This field is optional to allow higher level config management to default or override container images in workload controllers like Deployments and StatefulSets. +optional (QJenny)imagePullPolicyImage pull policy. One of Always, Never, IfNotPresent. Defaults to Always if :latest tag is specified, or IfNotPresent otherwise. Cannot be updated. +optional- Always means that kubelet always attempts to pull the latest image. Container will fail If the pull fails.

- Never means that kubelet never pulls an image, but only uses a local image. Container will fail if the image isn't present

- IfNotPresent means that kubelet pulls if the image isn't present on disk. Container will fail if the image isn't present and the pull fails.

portsList of ports to expose from the container. Exposing a port here gives the system additional information about the network connections a container uses, but is primarily informational. Not specifying a port here DOES NOT prevent that port from being exposed. Any port which is listening on the default "0.0.0.0" address inside a container will be accessible from the network. Cannot be updated. +optionalcontainerPortNumber of port to expose on the pod's IP address. This must be a valid port number, 0 < x < 65536.restartPolicyRestart policy for all containers within the pod. One of Always, OnFailure, Never. Default to Always. +optional

selectorLabel selector for pods. Existing ReplicaSets whose pods are selected by this will be the ones affected by this deployment. It must match the pod template's labels. (Selector selects the pods that will be controlled. if the matchLabels is a subset of a pod, then that pod will be selected and will be controlled.)matchLabelsmatchLabels is a map of {key,value} pairs. A single {key,value} in the matchLabels map is equivalent to an element of matchExpressions, whose key field is "key", the operator is "In", and the values array contains only "value". The requirements are ANDed. +optional. type: map[string]stringappis a key

- QJenny - what is +optional? (Nahid: +optional thakle user empty dite parbe, na dile nil hobe. +optional na thakle na dile empty hobe??? :/ )

apiVersion,kind,metadatais required.

- All objects in the Kubernetes REST API are unambiguously identified by a Name and a UID. For non-unique user-provided attributes, Kubernetes provides labels and annotations.

- name is included in the url

/api/v1/pods/<name> - max length 253. consists of lowercase alphanumeric, '-' and '.' (certain resources have more specific restrictions)

- A Kubernetes systems-generated string to uniquely identify objects.

- Every object created over the whole lifetime of a Kubernetes cluster has a distinct UID. It is intended to distinguish between historical occurrences of similar entities.

- Namespaces are intended for use in environments with many users spread across multiple teams, or projects. For clusters with a few to tens of users, you should not need to create or think about namespaces at all. Start using namespaces when you need the features they provide. Namespaces provide a scope for names. Names of resources need to be unique within a namespace, but not across namespaces.

- It is not necessary to use multiple namespaces just to separate slightly different resources, such as different versions of the same software: use labels to distinguish resources within the same namespace.

kubectl get namespacesto view namespaces in a clusterdefaultthe default namespace for objects with no other namespacekube-systemfor objects created by k8s systemkube-publicis crated automatically and readable by all users(including not authenticated). mostly reserved for cluster usage, in case some resources should be visible and readable publicly

kubectl create ns <new_namespace_namecreates a namespacekubectl --namespace=<non-default-namespace> run <deployment-name> --image=<image-name>kubectl get deploy --namespace=<new-namespace>if not mentioned, kubectl uses default namespaces. so you won't see the deployment created in previous comamnd if you don't mention this namespace namekubectl get deploy --all-namespacesshows all deployments under al namespaces- similar

--namespaceflag for all deloy/pod/service/container commands kubectl delete ns <new-namespace>deletes a namespacekubectl config viewshows the file~/.kube/config- clusters: here we have only minikube cluster, with its certificates and server. minikube cluster is running in that ip:port.

192.168.99.100(minikube ip) comes from here - users: kubectl communicates with k8s api, as a user(name: minikube (UJenny)). that user is defined here with its certificates and stuff

- context: configurations - we have one context. a context has cluster/user/namespace/name

- UJenny

- clusters: here we have only minikube cluster, with its certificates and server. minikube cluster is running in that ip:port.

kubectl config current-contextshows current-context field of the config filekubectl config set-context $(kubectl config current-context) --namespace=<ns>sets a ns as default, any changes you make/create objects will be done to this namespace- most k8s resources (pods, services, replication controllers etc.) are in some namespaces. low-level resources (nodes, persistentVolumes) are not in namespace.

kubectl api-resourcesshows all--namespaced=trueshows which are in some namespace--namespaced=falseshows which are not in a namespace

- When you create a Service, it creates a corresponding DNS entry. This entry is of the form ..svc.cluster.local, which means that if a container just uses , it will resolve to the service which is local to a namespace.

- This is useful for using the same configuration across multiple namespaces such as Development, Staging and Production. If you want to reach across namespaces, you need to use the fully qualified domain name (FQDN).

- to specify identifying attributes of objects - meaningful and relevant (but do not directly imply semantics to the core system). used to organize and seelect subsets of objects. can be attached at creation/added/modified.

- key can be anything, but have to be unique (within a object). e.g,

release,environment,tier,partition,track - Labels are key/value pairs. Valid label keys have two segments: an optional prefix and name, separated by a slash (/). The name segment is required and must be 63 characters or less, beginning and ending with an alphanumeric character ([a-z0-9A-Z]) with dashes (-), underscores (

_), dots (.), and alphanumerics between. The prefix is optional. If specified, the prefix must be a DNS subdomain: a series of DNS labels separated by dots (.), not longer than 253 characters in total, followed by a slash (/). If the prefix is omitted, the label Key is presumed to be private to the user. Automated system components (e.g. kube-scheduler, kube-controller-manager, kube-apiserver, kubectl, or other third-party automation) which add labels to end-user objects must specify a prefix. The kubernetes.io/ prefix is reserved for Kubernetes core components. - many objects can have same labels

- via a label selector, the client/user can identify a set of objects. the label selector is the core grouping primitive in k8s

- current, the API supports two types of selectors: equality-based and set-based. A label selector can be made of multiple requirements, which are comma-separated - acts as a logical AND(&&).

- An empty label selector selects every object in the collection

- A null label selector (which is only possible for optional selector fields) selects no objects (UJenny)

- The label selectors of two controllers must not overlap within a namespace, otherwise they will fight with each other.

- equality or inequality based requirements allow filtering by label keys and values. matching objects must satisfy ALL of the specified label constraints, though they may have additional labels as well.

- three kinds of operator are admitted

=,==,!=. first two are synonyms.environment = productionselects all resources with key equalenvironmentand value equal toproductiontier != frontendselects all resources with key equal totierand value distinct fromfrontendand all resources with no labels with thetierkey.environment=production, tier!=frontendselects resources inproductionexcludingfrontend- usually, pods are scheduled to nodes, based on various criteria. If we want to limit the nodes a pod can be assigned to

kind: Pod metadata: name: cuda-test spec: containers: - name: cuda-test image: "k8s.gcr.io/cuda-vector-add:v0.1" resources: limits: nvidia.com/gpu: 1 nodeSelector: accelerator: nvidia-tesla-p100 - Pod Struct

kind,metadatasamespec(podSpec) samecontainerssamename,imagesameresourcesCompute Resources required by this container. Cannot be updated. +optionallimitsLimits describes the maximum amount of compute resources allowed. +optional (this is of type ResourceList. ResourceList is a set of (resource name, quantity) pairs.)nvidia.com/gpuis resource name, whose quantity is1nodeSelectorNodeSelector is a selector which must be true for the pod to fit on a node. Selector which must match a node's labels for the pod to be scheduled on that node. +optionalaccelerator: nvidia-tesla-p100is a node label

- set-based label requirements allow one key with multiple values.

- three kinds of operators are supported:

in,notinandexists(only key needs to be mentioned) environment in (production, qa)selects all resources with key equal toenvironmentand value equal toproductionORqa. (of course OR, it cannot be AND, can it? a resource cannot have multiple values for one key)tier notin (frontend, backend)selects all resources with key equal totierand values other thanfrontendandbackendand all resources with no labels with thetierkeypartitionselects all resources including a label with keypartition, no values are checked!partitionselects all resources without the keypartition; no values are checked- comma acts like a AND

- so

partition, environment notin (qa)means the keypartitionhave to exist andenvironmenthave to be other thanqa(UJenny, will the resources with noenvironmentbe selected?)

- so

- Set-based requirements can be mixed with equality-based requirements. For example:

partition in (customerA, customerB), environment!=qa

- LIST and WATCH both are allowed to use using labels

- in URL:

- equality-based:

?labelSelector=environment%3Dproduction,tier%3Dfrontend - set-baed:

?labelSelector=environment+in+%28production%2Cqa%29%2Ctier+in+%28frontend%29

- equality-based:

- both selector style can be used to list or watch via REST client. e.g, targeting

apiserverwithkubectlkubectl get pods -l environment=production, tier=frontendkubectl get pods -l 'environment in (production), tier in (frontend)'

- set-based requirements are more expressive - they can implement OR operator

kubectl get pods -l 'environment in (production, qa)'selectsqaorproductionkubectl get pods -l 'environment, environment notin(frontend)'selects resources which hasenvironmentand it cannot befrontend(if i wroteenvironment notin(frontend)only, it would select the resources which doesn't haveenvironmentkey also)

servicesandreplicationcontrollersalso use label selectors to select other resources aspods- only equality-based requirements are supported (UJenny: is this restriction for deploy/pods too?)

- newer resources, such as

job,deployment,replicaset,daemon setsupport set-baed requirements

selector:

matchLabels:

component: redis

matchExpressions:

- {key: tier, operator: In, values: [cache]}

- {key: environment, operator: NotIn, values: [dev]}

matchExpressionsis a list of label selector requirements. The requirements are ANDed. +optionalkeyis the label key that the selector applies to. type: stringoperatorrepresents a key's relationship to a set of values. Valid operators are In, NotIn, Exists and DoesNotExist.valuesis an array of string values. If the operator is In or NotIn, the values array must be non-empty. If the operator is Exists or DoesNotExist, the values array must be empty. This array is replaced during a strategic merge patch. +optional (UJenny: merge patch?)

- All of the requirements, from both matchLabels and matchExpressions are ANDed together – they must all be satisfied in order to match.

- to attach arbitrary non-identifying metadata to objects. Clients such as tools and libraries can retrieve this metadata.

- labels are used to identify and select objects. annotations are not.

- can include characters not permitted by labels

"metadata": {

"annotations": {

"key1" : "value1",

"key2" : "value2"

}

}

- Instead of using annotations, you could store this type of information in an external database or directory, but that would make it much harder to produce shared client libraries and tools for deployment, management, introspection, and the like. (UJenny: examples are given)

- select k8s objects based on the value of one or more fields.

kubectl get pods --field-selector status.phase=Runningselects all pods for which the value ofstatus.phaseisRunningmetadata.name=my-service,metadata.namespace!=default,status.phase=Pending- using unsupported fields gives error

kubectl get ingress --field-selector foo.bar=bazgives errorError from server (BadRequest): Unable to find "ingresses" that match label selector "", field selector "foo.bar=baz": "foo.bar" is not a known field selector: only "metadata.name", "metadata.namespace"(UJenny: what the f is ingress) - supported operator are

=,==,!=(first two are same).kubectl get services --field-selector metadata.namespace!=default kubectl get pods --field-selector metadata.namespace=default,status.phase=RunningANDed- multiple resource types can also be selected

kubectl get statefulsets,services --field-selector metadata.namespace!=default

- multiple resource is also allowed for labels too:

kubectl get pod,deploy -l run=booklistkube2also works

- A Kubernetes object should be managed using only one technique. Mixing and matching techniques for the same object results in undefined behavior.

Management techniques Operates on Recommended environment Supported writers Learning curve

Imperative commands Live objects Development projects 1+ Lowest

Imperative object configuration Individual files Production projects 1 Moderate

Declarative object configuration Directories of files Production projects 1+ Highest

kubectl run nginx --image nginxkubectl create deployment nginx --image nginx- simple, easy to learn, single step

- doesn't record changes, doesn't provide template

kubectl create -f nginx.yamlkubectl delete -f <name>.yaml -f <name>.yamlkubectl replace -f <name>.yaml- Warning: The imperative replace command replaces the existing spec with the newly provided one, dropping all changes to the object missing from the configuration file. This approach should not be used with resource types whose specs are updated independently of the configuration file. Services of type LoadBalancer, for example, have their externalIPs field updated independently from the configuration by the cluster. (UJenny)

- compared with imperative commands:

- can be stored, provides template

- must learn, additional step writing YAML

- compated with declarative object config:

- simpler and easier to learn, more mature

- works best on files, not directories. updates to live objects must be reflected in config file or they will be lost in next replacement

- When using declarative object configuration, a user operates on object configuration files stored locally, however the user does not define the operations to be taken on the files. Create, update, and delete operations are automatically detected per-object by kubectl. This enables working on directories, where different operations might be needed for different objects. UJenny

- Note: Declarative object configuration retains changes made by other writers, even if the changes are not merged back to the object configuration file. This is possible by using the patch API operation to write only observed differences, instead of using the replace API operation to replace the entire object configuration. UJenny

kubectl diff -f configs/to see what changes are going to be madekubectl apply -f configs/process all object configuration files in the configs directorykubectl diff -R -f configs/,kubectl apply -R -f configs/recursively processs directories- changes made directly to live objects are retained, even if they are not merged. has better support for operating on directories and automatically detecting opeartion types (create, patch, delete) per-object

- harder to debug and understand results, partial updates using diffs create complex merge and patch operations.

- UJenny: the whole thing

run: create a new deploy to run containers in one or more podsexpose: create a new service to load balance traffic across podsautoscale: create a new autoscaler object to automatically horizontally scale a controller, such as a deployment.create: driven by object typecreate <objecttype> [<subtype>] <instancename>kubectl create service nodeport <name>

scalehorizontally scale a controller to add or removeannotateadd or remove an annotationlabeladd or remove a labelsetset/edit an aspect (env, image, resources, selector etc) of an objecteditdirectly edit the config filepatchdirectly modify specific fields of a live object by using a patch string (UJenny)deletedeletes an objectgetdescribelogsprints the stdout and stderr for a container running in a podkubectl create service clusterip <name> --clusterip="None" -o yaml --dry-run | kubectl set selector --local -f - 'environment=qa' -o yaml | kubectl create -f -createcommand cannot take every fields as flags. used create + set to do this (usingsetcommand to modify objects before creation)kubectl create service -o yaml --dry-runcommand creates the config file for the service, but prints it to stdout as YAML instread sending it to k8s API--dry-runif it is true, only print the object that would be sent, without sending it.

kubectl set selector --local -f - -o -yamlreads the config file from stdin, write the updated configuration to stdout as YAMLkubectl create -f -command creates the object using the config provided via stdin- UJenny:

--local

kubectl create service clusterip <name> --clusterip="None" -o yaml --dry-run > /tmp/srv.yaml

kubectl create --edit -f /tmp/srv.yaml

- `kubectl create service` creates the config for the service and saves it to `/tmp/srv.yaml`

- `kubectl create --edit` open the config file for editiog before creating

kubectl create -f <filename/url>creates an object from a config filekubectl replace -f <filename/url>- Warning: Updating objects with the replace command drops all parts of the spec not specified in the configuration file. This should not be used with objects whose specs are partially managed by the cluster, such as Services of type LoadBalancer, where the externalIPs field is managed independently from the configuration file. Independently managed fields must be copied to the configuration file to prevent replace from dropping them. UJenny

kubectl delete -f <filename/url>UJenny - how does this work?- ever if changes are made to live config file of the object -

delete <object-config>.yamlalso works too

- ever if changes are made to live config file of the object -

kubectl get -f <filename/url> -o yamlshow objects.-o yamlshows the yaml file- limitation: created a deployment from a yaml file. edited the

kubectl edit deploy <name>(note that, this is not the yaml file from which I created the object. this is calledlive configuration). thenkubectl replace -f <object-config>.yamlcreates the object from scratch. the edit is gone. works for every kind of object. kubectl applyif multiple writers are needed.kubectl create -f <url> --editedits the config file from the url, then create the object.kubectl get deploy <name> -o yaml --export > <filename>.yamlexports the live object config file to local config file- then remove the status field (interestingly, the status field is automatically after exporting, although

kubectl get deploy <name> -o yamlhas the status field) - then run

kubectl replace -f <filename>.yaml - this solves the

replaceproblem

- then remove the status field (interestingly, the status field is automatically after exporting, although

- Warning: Updating selectors on controllers is strongly discouraged. (UJenny: because it would affect a lot?)

- The recommended approach is to define a single, immutable PodTemplate label used only by the controller selector with no other semantic meaning. (significant labels. doesn't have to be changed again)

object config filedefines the config for k8s object.live object config/live configvalues an object, as observed by k8s cluster. this is typically stored inetcddeclaration config writer/declarative writera person or software component that makes updates to a live object.

- worker machine in k8s, previously knows as

minion - maybe vm or physical machine

- each node contains the services necessary to run pods

- the services on a node include docker, kubelet, kube-proxy

- a node's status contains - addresses, condition, capacity, info

- Node is a top-level resource in the k8s REST API.

- usage of these fields depends on your cloud provider or bare metal config

HostNamethe hostname as reported by the node's kernel. can be overridden via the kubelet--hostname-overrideparameterExternalIPavailable from outside the clusterInternalIPwithin the cluster

- describes the status of all running nodes

OutOfDiskTrueif there is insufficient free space on the node for adding new pods, otherwiseFalseReadyTrueif the node is healthy and ready to accept pods,Falseif the node is not healthy and is not accepting pods andUnknownif the node controller has not heard from the node in the lastnode-monitor-grace-period(default is 40 seconds)MemoryPressureTrueif pressure exists on the node memory - that is, if the node memory is low; otherwiseFalsePIDPressureTrueif pressure exists on the processes - that is, if there are too many processes on the node, otherwiseFalseDiskPressureTrueif pressure exists on the disk size - that is, if the disk capacity is low; otherwiseFalseNetworkUnavailableTrueif the network for the node is not correctly configured, otherwiseFalse

"conditions": [

{

"type": "Ready",

"status": "True"

}

]

- if the status of the

Readycondition remainsUnknownorFalsefor longer thanpod-eviction-timeout(default 5m), an argument is passed tokube-controller-managerand all the pods on the node are scheduled for deletion by Node Controller. if apiserver cannot communicate with kubelet on the node, the deletion decision cannot happen until the communication is established again. in the meantime, the pods on the node continues to run, inTerminatingorUnknownstate. - prior to version 1.5, node controller force deletes the pods from the apiserver. from 1.5, if k8s cannot deduce if the node has permanently left the cluster, admin needs to delete the node by hand. then all pods will be deleted with the node.

- in version 1.12,

TaintNodesByConditionfeature is promoted to beta,so node lifecycle controller automatically creates taints that represent conditions. Similarly the scheduler ignores conditions when considering a Node; instead it looks at the Node’s taints and a Pod’s tolerations. users can choose between the old scheduling model and a new, more flexible scheduling model. A Pod that does not have any tolerations gets scheduled according to the old model. But a Pod that tolerates the taints of a particular Node can be scheduled on that Node. Enabling this feature creates a small delay between the time when a condition is observed and when a taint is created. This delay is usually less than one second, but it can increase the number of Pods that are successfully scheduled but rejected by the kubelet. (UJenny)

- describes the resources available on the node: cpu, memory, max no of pods that can be scheduled

- general information about the node, such as kernel version, k8s (kubelet, kube-proxy) version, docker version, os name. these info are gathered by kubelet

- unlike pods and services, a node is not inherently created by k8s. it is created by cloud providers, e.g, google compute engine or it exists in your pool of physical or vm. so when k8s creates a node, it creates an object that represents the node. then k8s checks whether the node is valid or not.

{

"kind": "Node",

"apiVersion": "v1",

"metadata": {

"name": "10.240.79.157",

"labels": {

"name": "my-first-k8s-node"

}

}

}

- k8s creates a node object internally (the representation) and validates the node by health checking based on `metadata.name`. if it is valid, it is eligible to run a pod. otherwise, it is ignored for any cluster until it becomes valid. k8s keeps the object for the invalid node and keeps checking. to stop this process, you have to explicitly delete this node.

- there are 3 components that interact with the k8s node interface: node controller, kubelet, kubectl

- k8s master component which manages varioud aspects of nodes

- first role is assigning a CIDR block to a node when it is registered (if CIDR assignment is turned on) (UJenny)

- second is keeping the node controller's internal list of nodes up to date with the cloud provider's list of available machines. when a node is unhealthy, the node controller asks the cloud provider if the vm for that node is still available. if not, the node controller deletes the node from its list of nodes.

- third is monitoring the nodes' health. the node controller is responsible for updating the NodeReady condition of NodeStatus to ConditionUnknown when a node becomes unreachable and then later evicting all the pods from the node if the node continues to be unreachable(

--node-monitor-grace-periodis 40s andpod-eviction-timeoutis 5m by defautl). the node controller checks the state of each node every--node-monitor-periodseconds (default 5s) (QJenny where are these flags???) - prior to 1.13, NodeStatus is the heartbeat of a node. from 1.13, node lease feature is introduced. when this feature is enabled, each node has an associated

Leaseobject inkube-node-leasenamespace. both NodeStatus and node lease are treated as heartbeats. Node leases are updated frequently and NodeStatus is reported from node to master only when there is some change or some time(default 1 minute) has passed, which is longer than default timeout of 40 seconds for unreachable nodes. Since node lease is much more lightweight than NodeStatus, this feature makes node heartbeat cheaper. - Node controller limits the eviction rate to

--node-eviction-rate(default 0.1) per second, meaning it won't evict pods from more than 1 node per 10 seconds.- If your cluster spans multiple cloud provider availability zones (otherwise its one zone), node eviction behavior changes when a node in a given availability becomes unhealthy.

- Node controller checks the percentage of unhealthy nodes (

NodeReadyisFalseorConditionUnknown). If the fraction is at least--unhealthy-zone-threshold(default 0.55) then the eviction rate is reduced - if the cluster is small (less than or equal to--large-cluster-size-threshold, default 50 nodes), then evictions are stopped, otherwise the eviction rate is reduced to--secondary-node-eviction-rate(default 0.01) per second. - This is done because one availability zone might become partitioned from the master while the others remain connected.

- Key reason for spreading the nodes across availability zone is that workload can be shifted to healthy zones when one entire zone goes down.

- Therefore if all nodes in a zone are unhealthy then node controller evicts at the normal rate

--node-eviction-rate - Corner case is, if all zones are completely unhealthy, node controller thinks there's some problem with master connectivity and stops all evictions until some connectivity is restored.

- Starting in Kubernetes 1.6, the NodeController is also responsible for evicting pods that are running on nodes with NoExecute taints, when the pods do not tolerate the taints. Additionally, as an alpha feature that is disabled by default, the NodeController is responsible for adding taints corresponding to node problems like node unreachable or not ready. See this documentation for details about NoExecute taints and the alpha feature. Starting in version 1.8, the node controller can be made responsible for creating taints that represent Node conditions. This is an alpha feature of version 1.8. (UJenny)

- When the kubelet flag

--register-nodeis true (the default), the kubelet will attempt to register itself with the API server. --kubeconfigpath to credentials to authenticate itself to the apiserver--cloud-providerhow to talk to a cloud provider to read metadata about itself--register-nodeautomatically register with the API server--register-with-taintsregister the node with the given list of taints (comma separated =:) No-op ifregister-nodeis false--node-ipip address of the node--node-labelslabels to add when registering the node in the cluster--node-status-update-frequencyspecifies how often kubelet posts node status to master

Manual Node Administration (UJenny)

- Number of cpus and amount of memory

- Normally nodes register themselves and report their capacity when creating node object

- K8s scheduler ensures that there are enough resources for all thepods on a node. The sum of the requests of containers on the node must be no greater than the node capacity. (It includes all containers started by the kubelet, not the ones started directly by dockers nor any process running outside of the containers)

- If you want to explicitly reserve resources for non-pod processes, you can create a placeholder pod.

apiVersion: v1

kind: Pod

metadata:

name: resource-reserver

spec:

containers:

- name: sleep-forever

image: k8s.gcr.io/pause:0.8.0

resources:

requests:

cpu: 100m

memory: 100Mi

- set the cpu and memory values to the amount of resources you want to reserve. Place the file in the manifest directory (`--config=DIR` flag of kubelet). do this on every kubelet you want to reserve resources. QJenny

- communication paths between the master (really the apiserver) and the k8s cluster.

- to allow users to customize their installatin to run the cluster on an untrusted network or on fully public IPs on a cloud provider

- all communicatin paths from the cluster to master terminate at the apiserver (

kube-apiserver, which exposes k8s api. it is designed to expose remote services) - in a typical deployment, the apiserver is configured to listen for remote connections on a secure https port (443) with one or more forms of client authentication enable, which is necessary if annonymous requests of service account tokens are allowed (UJenny)

- nodes should be provisioned with the public root certificate for the cluster such that they can connect securely to the apiserver along with valid client credentials. for example, on a default GKE(Google Kubernetes Engine) deployment, the client credentials provided to the kubelet are in the form of a client certificate.

- pods that wish to connect to the apiserver can do so securely by taking a 'service account' so that k8s will automatically inject the public root certificate and a valid bearer token into the pod when it is instactiated.

- the

kubernetesservice (in all namespaces) is configured with a virtual IP address that is redirected (viakube-proxy) to the https endpoint on the apiserver - the master components also communicate with the cluster apiserver over the secure port

- so, the default operating mode for connections from the cluster (nodes and pods running on the nodes) to the master is secured by default and can run over untrusted and/or public network

- two primary communication paths from the master(apiserver) to the cluster

- from the apiserver to the kubelet process

- from the apiserver to any node, pod or service through the apiserver's proxy functionality

- used for

- fetching logs for pods

- attaching (through kubectl) tp running pods

- providing the kubelet's port-forwarding functionality

- these connecions terminate at the kubelet's https endpoint.

- by default, apiserver doesn't verify the kubelet's serving certificate, which makes the connection subject to man-in-the-middle attacks, and unsafe to run over untrusted and/or public networks

- to verify this connection, use the

--kubelet-certificate-authorityflag to provide the apiserver with a root certificate bundle to use to verify the kubelet's serving certificate - if that is not possible, use

SSH tunnelingbetweent the apiserver and kubelet if required to avoid connecting over an untrusted or public network - finally, kubelet authentication and/or authorization shouldb be enabled to secure the kubelet API

- these are default to plain http connections and are therefore neither authenticated nor encrypted.

- they can be run over a secure https connection by prefixing

https:to the node, pod or service name in the API URL, but they will not validate the certificate provided by the https endpoint nor provide client credentials - so while the connection will be encrypted, it will not provide any guarantees of integrity. - these connections are not currently safe to run over untrusted and/or public networks.

- this concept was originally created to allow cloud specific vendor code and k8s core to evolve independent of one another. (UJenny - vendor code)

- it runs alongside other master components such as the k8s controller manager, the api server, and scheduler. it can also be started as k8s addon, in which case it runs on top of k8s

- CCM's design is based on a plugin mechanism that allows new cloud providers to integrate with k8s by using plugins

- there are plans for migrating cloud providers from the old model to the new ccm model

- here k8s and cloud provider are integrated with 3 different components - kubelet, k8s controller manager, k8s api server

- here, single point of integration with cloud

- CCM breaks away cloud dependent controller loops from kcm - Node controller, volume controller, router controller, service controller

- from 1.9, due to the complexity involved and due to the existing efforts to abstract away vendor specific volume logic, volume controller is not moved to ccm. instead ccm runs another controller called PersistentVolumeLabels controller. this controller is responsible for setting the zone and region labels on PersistentVolumes created in GCP and AWS clouds (UJenny is volumed controller still in or not? if in, who controls it?)

- the original plan to support volumes using CCM was to use Flex volumes to support pluggable volumes. However, a competing effort known as CSI is being planned to replace Flex. Considering these dynamics, we decided to have an intermediate stop gap measure until CSI becomes ready (UJenny)

- majority of ccm's functions are derived from the kcm

node controlleris responsible for initializing a node by obtaining information about the nodes running in the cluster from the cloud provider. does the following functions- intialize a node with cloud specific zone/region labels

- initialize a node with cloud specific instance details, e. g, type and size

- obtain the node's network addresses and hostname

- if a node becomes unresponsive, check the cloud to see if the node has been deleted form the cloud. if deleted, delete the k8s node object

route controlleris responsible for configuring routes in the cloud appropriately so that containers on different nodes can communicate with each other. route controller is only applicable for Google Compute Engine clusters (QJenny nodes can communicate with each other??? why and how do they do that?)service controlleris responsible for listening to service create, update and delete events. Based on the current state of services, it configures cloud load balancers (such as ELB(Elastic Load Balancing) or Google LB) to reflect the state of the services in k8s.PersistentVolumeLabels controllerapplies labels on AWS EBS(Amazon Web Services, Elastic Block Store)/GCE PD(Google Compute Engine Persistent Disk) volumes when they are created. removes the need for users to manually set the labels on these volumes.- these labels are essential for the scheduling of pods as these volumes are constrained to work only within the region/zone that they are in. (pods need to be attached to volumes only in same region/zone - because otherwise they would be connected to remote network connection.) (shudipta said if they are in different network - they should use NFS (network file system) volume type)

- this controller was created for ccm. it was done to move the PV labelling logic in the k8s api server to the CCM (before it was an admission controller). it doesn't run on the KCM.

- node controller contains the cloud-dependent functionality of the kubelet. before CCM, the kubelet was responsible for initializing a node with cloud-specific details such as IP addresses, region/zone labels and instance type information

- now, this initialization operation is moved to CCM

- kubelet initializes a node without cloud-specific information.

- and adds a taint to the newly created node that makes the node unschedulable until the CCM initializes the node with cloud-specific information. kubelet then removes the taint. (UJenny - again. what's taint?)

- the PersistentVolumeLabels controller moves the cloud-dependent functionality of the K8s API server to CCM

- CCM uses Go interfaces to allow implementations from any cloud to be plugged in.

- it uses the CloudProvider Interface defined here (UJenny)

- the implementations of four shared controllers above and shared cloudprovider interface (and some other things) will stayRather than specifying the current desired state of all replicas, pod templates are like cookie cutters. Once a cookie has been cut, the cookie has no relationship to the cutter. There is no “quantum entanglement”. Subsequent changes to the template or even switching to a new template has no direct effect on the pods already created. Similarly, pods created by a replication controller may subsequently be updated directly. This is in deliberate contrast to pods, which do specify the current desired state of all containers belonging to the pod. This approach radically simplifies system semantics and increases the flexibility of the primitive.

- in k8s core.

- implementation specific to cloud providers will be built outside of the core and implement interfaces defined in the core

- node controller only works with node objects. requires full access to

Get,List,Create,Update,Patch,Watch,Delete(v1/Node) - route controller listens to Node object creation and configures routes appropriately. requires

Getaccess to Node objects (v1/Node) - service controller listens to service object create, update and delete events and then configures endpoints for those services appropriately. (v1/Service)

- To access services, it requires

ListandWatchaccess. - To update services, it requires

PatchandUpdateaccess. - To set up endpoints for the services, it requires access to

Create,List,Get,Watch,Update

- To access services, it requires

- PersistentVolumeLabels controller listens on PersistentVolume (PV) create events and then updates them. requires access to get and update PVs through v1/PersistentVolume:

Get,List,Watch,Updateaccess - others: the implementation of the core CCM requires access to create events and to ensure secure operation, it requires access to create ServiceAccounts through v1/Event:

Create,Patch,Updateand v1/ServiceAccount:Create - kind: ClusterRole UJenny

- cloud providers implement CCM to integrate with k8s - so that cluster can be hosted on these cloud providers? (UJenny)

Containers (UJenny)

- default pull policy is

IfNotPresentwhich causes the kubelet to skip pulling. - to always force a pull, one of these can be done

- set the imagePullPolicy to

Always - omit the imagePullPolicy and use

:latestas tag (not best practice) - omit imagePullPolicy and the tag for the image (UJenny - omit both? test)

- enable the

AlwaysPullImagesadmission controller (UJenny)

- set the imagePullPolicy to

- smallest deployable object

- a pod represents a running process on your cluster

- a pod encapsulates

- one or multiple containers

- storage resources

- a unique network IP

- options that govern how the container(s) should run.

- two ways:

- pods that run a single container: most common use case, a pod just wraps around a container. kubernetes manages the pods rather than the containers

- pods that run multiple containers that need to work together: tightly coupled containers and need to share resources. these co-located containers might form a single cohesive unit of service - one container serving files from a shared volume to the public, another container refreshes or updates those files.

- replication: horizontal scaling (multiple instances)

- the containers in a pod are automatically co-located and co-scheduled on the same physical or virtual machine in the cluster.

- they can share resources and dependencies, communicate with one another, and coordinate when and how they are terminated.

- it's a advanced use case. only use when they are tightly coupled. for example, one container acts as a web server for files in a shared volume and another one updates those files from a remote source.

- pods provide two kinds of shared resources for their containers: networking and storage.

- networking: each container in a pod shares the network namespace, including the IP address and network ports. they communicate with one another using

localhost. (QJenny how???) - when containers in a pod communicate with entities outside the Pod, they must coordinate how they use the shared network resources (such as ports)

- storage: a pod can specify a set of shared storage volumes. All containers in the pod can access the shared volumes. Volumes also allow persistent data in a Pod to survive in case one of the containers within needs to be restarted

- networking: each container in a pod shares the network namespace, including the IP address and network ports. they communicate with one another using

- you'll rarely create individual pods directly

- pods can be created directly by me or indirectly by Controller

- pod remains in the scheduled Node until the process is terminated, pod object is deleted, pod is evicted for lack of resources or Node fails

- the pod itself doesn't run, but it is an environment the containers run in and persists until it is deleted

- pods don't self-heal. if a pod is scheduled to a node that fails or if scheduling operation itself fails, the pod is deleted. and pods don't survive evictiion due to lack of resources or Node maintenance

- k8s uses a higher-level abstraction, called a controller, that handles the work of managing the relatively disposable Pod instances.

- although it is possible to use Pod directly, it's far more common to manage pods using a Controller

- A controller can create and manage multiple pods, handling replication and rollout and providing self-healing capabilities at cluster scope. for example, if a Node fails, the controller might automatically replace the Pod by scheduling an identical replacement on a different Node

- some examples of controller - deployment, StatefulSet, DaemonSet

- pod templates are pod specifications which are included in other objects such as Replication Controllers, Jobs, DaemonSets.

- controllers use pod templates to make actual pods.

apiVersion: v1

kind: Pod

metadata:

name: myapp-pod

labels:

app: myapp

spec:

containers:

- name: myapp-container

image: busybox

command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 3600']

- rather than specifying the current desired state of all replicas, pod templates are like cookie cutters. once a cookie has been cut, the cookie has no relationship to the cutter.

- subsequent changes to the template or even switching to a new template has no direct effect on the pods already created.

- changes to the template doesn't affect the pods already created

- Similarly, pods created by a replication controller may subsequently be updated directly. this is in deliberate contrast to pods, which do specify the current desired state of all containers belonging to the pod. (after creating pods with controller, the pods can be updated separately with

kubectl edit pod <name>. it will affect only the specific pod) - a pod models an application-specific "logical host". [before: same physical/virtual machine] = [now: same logical host]

- the shared context of a pod is a set of Linux namespaces, cgroups, and other aspects of isolation (same things that isolate Docker container)

cgroups(control groups) is a Linux kernel feature that limits, accounts for and isolates the resource usage(CPU, memory, disk I/O, network etc) of a collection of processes.

- within a pod's context, the individual applications may have further sub-isolations applied.

- as mentioned before, containers in same pod share an IP address and port space and can find each other via

localhost. they can also communicate using standard inter-process communications likeSystemV semaphoresorPOSIXshared memory. (UJenny: what?) - containers in different pods have distinct IP addresses and cannot communicate by IPC (interprocess communication) without special configuration (UJenny)

- containers in different pods communicate usually with each other via pod ip addresses.

- applications within a pod also have access to shared volumes, which are defined as part of a pod and are made available to be mounted into each applicaion's filesystem.

- pods are of "short life". after pods are created, they are assigned a unique ID (UID), scheduled to nodes where they remain until termination or deletion.

- a pod is defined by UID

- If a node dies, pods are scheduled for deletion after a timeout period. they are not rescheduled, instead it is replaced by an identical pod, if desired with same name but with a new UID

- when something is said to have same lifetime as a pod, such as a volume, it exists as long as that specific pod (with that UID) exists. if the pod is deleted, volume is also destroyed. even if an identical pod is replaced, volume will be created anew.

- pods are unit of deployment, horizontal scaling, replication

- colocation (coscheduling), shared fate(e.g, termination), coordinated replication, resource sharing, dependency management are handled automatically in a pod

- applications in a pod must decide which one will use which port (one container is exposed in one port)

- each pod has an IP address in a flat shared networking space that can communicate with other physical machines and pods across the network

- the hostname is set to the pod's name for the application containers within the pod

- volumes enable data to survive container restarts and to be shared among the applicatiions within the pods

- uses of pods UJenny

- individual pods are not intended to run multiple instances of the same application

- why not just run multiple programs in a single container?

- transparency: helps the infrastructure to manage them, for example, process management and resoruce monitoring.

- decoupling software dependencies: they maybe be versioned, rebuilt, redeployed independently. k8s may even support live updates of individual containers someday.

- ease of use. users don't need to run their process managers (because infrastructure manages them?)

- efficiency: infrastructure takes on more responsibility, containers can be light weight

- Why not support affinity-based co-scheduling of containers? That approach would provide co-location, but would not provide most of the benefits of pods, such as resource sharing, IPC, guaranteed fate sharing, and simplified management. UJenny

- controllers (such as deployement) provides self-healing, replication, rollout management wiht a cluster scope, that's why pods should use controllers, even for singletons

- pod is exposed as a primitive in order to facilitate : UJenny

- as pods represent running processes on nodes in the cluster, it is important to allow those processes to gracefully terminate instead of being killed with a KILL signal

- when a user requests for deletion of a pod

- the system records the intended grace period before the pod is forcefully killed

- a TERM signal is sent to main processes of containers of the pod

- once the grace period is over, KILL signal is sent to those processes (of containers)

- then pod is deleted from API server

- if kubelet or container manager is restarted while waiting for processes to terminate, termination will be tried again with full grace period.

- an example flow -

- user sends commands to delete a pod with grace period of 30s

- the pod in the API server is updated with the last-alive-time and grace period

kubectl get podswill showTerminatingfor the pod. when the kubelet sees theTerminatingmark, it begins the process (the following subpoints happen simulaneously with this step)- if the pod has defined a

preStop hook, it is invoked inside of the pod. preStopis called immediately before a container is terminated. it must complete before the call to delete the container can be sent. of typeHandler- if the

preStophook is still running after grace period expires, 2nd step again - API server is updated with 2 second of grace period again. - the processes are sent TERM signal

- pod is removed from endpoints list for service, are no longer part of the set of running pods for replication controllers

- pods that shutdown slowly cannot continue to serve traffic as load balancers (like the service proxy) remove them from their rotations (UJenny)

- when the grace perios expires, running processes are killed with SIGKILL

- kubelet will finish deleting the pod on the API server by setting grace period to 0s (immediate deletion).

- default grace period is 30s.

kubectl deletehas--grace-period=<second>- to force delete set

--grace-periodto 0 along with--force - force deletion of a pod: doesn't wait for kubelet confirmation from the node. the pod is deleted from the apiserver immediately and a new pod is created with the same name. on the node, pods that are set to terminate will be given a small grace period before being killed.

- using

privilegedflag on theSecurityContextof the container (not pod) spec enables priviledge mode. it allows the containers to use linux capabilities like manipulating the network stack and accessing devices. all processes in a container get same privileges usually. with privileged mode, it is easier to write network and volume plugins as separate pods that don't need to be compiled in kubelet - privileged mode is enabled from k8s v1.1. If the master is v1.1 or higher, but node is below, privileged pods will be created (accepted in apiserver) but will be in pending state. if master is below v1.1, pods won't be created.

- pod's status field is a

PodStatusobject, which has aphasefield. (current condition of the pod)PendingThe Pod has been accepted by the Kubernetes system, but one or more of the Container images has not been created. This includes time before being scheduled as well as time spent downloading images over the network, which could take a while.RunningThe Pod has been bound to a node, and all of the Containers have been created. At least one Container is still running, or is in the process of starting or restarting.SucceededAll Containers in the Pod have terminated in success, and will not be restarted.FailedAll Containers in the Pod have terminated, and at least one Container has terminated in failure. That is, the Container either exited with non-zero status or was terminated by the system.UnknownFor some reason the state of the Pod could not be obtained, typically due to an error in communicating with the host of the Pod.

- PodStatus has a

PodConditionarray (current service state of the pod)- Details UJenny

conditions: - lastProbeTime: null lastTransitionTime: 2018-12-11T08:54:48Z status: "True" type: Initialized - lastProbeTime: null lastTransitionTime: 2018-12-12T04:01:42Z status: "True" type: Ready - lastProbeTime: null lastTransitionTime: 2018-12-11T08:54:48Z status: "True" type: PodScheduled

- A probe is a diagnostic performed periodically by kubelet on a container. container struct has two member of this type (liveness and readiness)

- to perform a diagnostic, kubelet calls a Handler

- Probe struct - Probe describes a health check to be performed against a container to determine whether it is alive or ready to receive traffic.

HandlerThe action taken to determine the health of a container. 3 types of handlers-ExecActionExecutes a specified command inside the Container. The diagnostic is considered successful if the command exits with a status code of 0. takes a command as an element of this structTCPSocketActionPerforms a TCP check against the Container’s IP address on a specified port. The diagnostic is considered successful if the port is open. takes the port as element. takes host name to connect to, default to pod ip. (UJenny - host name???)HTTPGetActionPerforms an HTTP Get request against the Container’s IP address on a specified port and path. The diagnostic is considered successful if the response has a status code greater than or equal to 200 and less than 400. takes path, port, host (default to pod ip) as element of this structInitialDelaySecondsof typeint32- Number of seconds after the container has started before liveness probes are initiated.

- each probe has one of three results:

- success: the container passed the diagnostic

- failure: failed the diagnostic

- unknown: the diagnostic itself failed, so no action should be taken

- the kubelet can optionally perform and react to two kinds of probes on running containers: