Learn how to create reliable ML systems by testing code, data and models.

👉 This repository contains the interactive notebook that complements the testing lesson, which is a part of the MLOps course. If you haven't already, be sure to check out the lesson because all the concepts are covered extensively and tied to software engineering best practices for building ML systems.

Tools such as pytest allow us to test the functions that interact with our data but not the validity of the data itself. We're going to use the great expectations library to create expectations as to what our data should look like in a standardized way.

!pip install great-expectations==0.15.15 -qimport great_expectations as ge

import json

import pandas as pd

from urllib.request import urlopen# Load labeled projects

projects = pd.read_csv("https://raw.githubusercontent.com/GokuMohandas/Made-With-ML/main/datasets/projects.csv")

tags = pd.read_csv("https://raw.githubusercontent.com/GokuMohandas/Made-With-ML/main/datasets/tags.csv")

df = ge.dataset.PandasDataset(pd.merge(projects, tags, on="id"))

print (f"{len(df)} projects")

df.head(5)| id | created_on | title | description | tag | |

|---|---|---|---|---|---|

| 0 | 6 | 2020-02-20 06:43:18 | Comparison between YOLO and RCNN on real world... | Bringing theory to experiment is cool. We can ... | computer-vision |

| 1 | 7 | 2020-02-20 06:47:21 | Show, Infer & Tell: Contextual Inference for C... | The beauty of the work lies in the way it arch... | computer-vision |

| 2 | 9 | 2020-02-24 16:24:45 | Awesome Graph Classification | A collection of important graph embedding, cla... | graph-learning |

| 3 | 15 | 2020-02-28 23:55:26 | Awesome Monte Carlo Tree Search | A curated list of Monte Carlo tree search papers... | reinforcement-learning |

| 4 | 19 | 2020-03-03 13:54:31 | Diffusion to Vector | Reference implementation of Diffusion2Vec (Com... | graph-learning |

When it comes to creating expectations as to what our data should look like, we want to think about our entire dataset and all the features (columns) within it.

# Presence of specific features

df.expect_table_columns_to_match_ordered_list(

column_list=["id", "created_on", "title", "description", "tag"]

)# Unique combinations of features (detect data leaks!)

df.expect_compound_columns_to_be_unique(column_list=["title", "description"])# Missing values

df.expect_column_values_to_not_be_null(column="tag")# Unique values

df.expect_column_values_to_be_unique(column="id")# Type adherence

df.expect_column_values_to_be_of_type(column="title", type_="str")# List (categorical) / range (continuous) of allowed values

tags = ["computer-vision", "graph-learning", "reinforcement-learning",

"natural-language-processing", "mlops", "time-series"]

df.expect_column_values_to_be_in_set(column="tag", value_set=tags)There are just a few of the different expectations that we can create. Be sure to explore all the expectations, including custom expectations. Here are some other popular expectations that don't pertain to our specific dataset but are widely applicable:

- feature value relationships with other feature values →

expect_column_pair_values_a_to_be_greater_than_b - row count (exact or range) of samples →

expect_table_row_count_to_be_between - value statistics (mean, std, median, max, min, sum, etc.) →

expect_column_mean_to_be_between

The advantage of using a library such as great expectations, as opposed to isolated assert statements is that we can:

- reduce redundant efforts for creating tests across data modalities

- automatically create testing checkpoints to execute as our dataset grows

- automatically generate documentation on expectations and report on runs

- easily connect with backend data sources such as local file systems, S3, databases, etc.

# Run all tests on our DataFrame at once

expectation_suite = df.get_expectation_suite(discard_failed_expectations=False)

df.validate(expectation_suite=expectation_suite, only_return_failures=True)"success": true,

"evaluation_parameters": {},

"results": [],

"statistics": {

"evaluated_expectations": 6,

"successful_expectations": 6,

"unsuccessful_expectations": 0,

"success_percent": 100.0

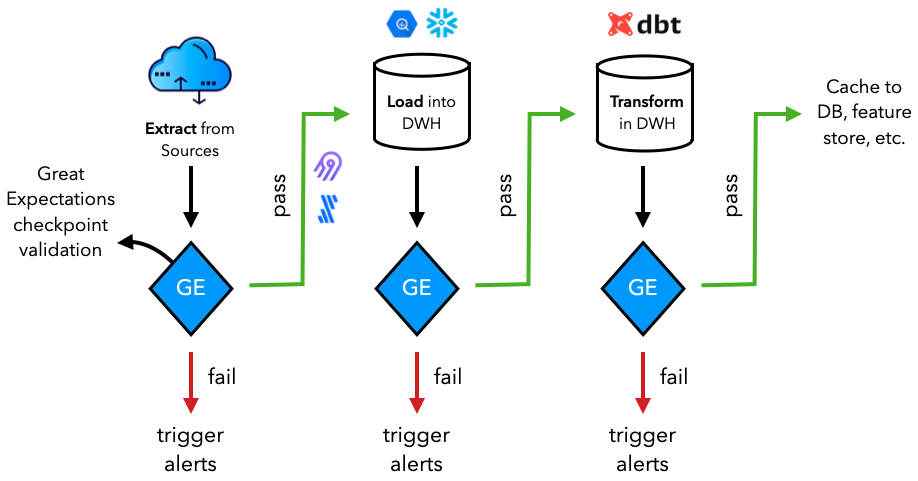

}Many of these expectations will be executed when the data is extracted, loaded and transformed during our DataOps workflows. Typically, the data will be extracted from a source (database, API, etc.) and loaded into a data system (ex. data warehouse) before being transformed there (ex. using dbt) for downstream applications. Throughout these tasks, Great Expectations checkpoint validations can be run to ensure the validity of the data and the changes applied to it.

Once we've tested our data, we can use it for downstream applications such as training machine learning models. It's important that we also test these model artifacts to ensure reliable behavior in our application.

Unlike traditional software, ML models can run to completion without throwing any exceptions / errors but can produce incorrect systems. We want to catch errors quickly to save on time and compute.

- Check shapes and values of model output

assert model(inputs).shape == torch.Size([len(inputs), num_classes])- Check for decreasing loss after one batch of training

assert epoch_loss < prev_epoch_loss- Overfit on a batch

accuracy = train(model, inputs=batches[0])

assert accuracy == pytest.approx(0.95, abs=0.05) # 0.95 ± 0.05- Train to completion (tests early stopping, saving, etc.)

train(model)

assert learning_rate >= min_learning_rate

assert artifacts- On different devices

assert train(model, device=torch.device("cpu"))

assert train(model, device=torch.device("cuda"))Behavioral testing is the process of testing input data and expected outputs while treating the model as a black box (model agnostic evaluation). A landmark paper on this topic is Beyond Accuracy: Behavioral Testing of NLP Models with CheckList which breaks down behavioral testing into three types of tests:

invariance: Changes should not affect outputs.

# INVariance via verb injection (changes should not affect outputs)

tokens = ["revolutionized", "disrupted"]

texts = [f"Transformers applied to NLP have {token} the ML field." for token in tokens]

predict.predict(texts=texts, artifacts=artifacts)['natural-language-processing', 'natural-language-processing']

directional: Change should affect outputs.

# DIRectional expectations (changes with known outputs)

tokens = ["text classification", "image classification"]

texts = [f"ML applied to {token}." for token in tokens]

predict.predict(texts=texts, artifacts=artifacts)['natural-language-processing', 'computer-vision']

minimum functionality: Simple combination of inputs and expected outputs.

# Minimum Functionality Tests (simple input/output pairs)

tokens = ["natural language processing", "mlops"]

texts = [f"{token} is the next big wave in machine learning." for token in tokens]

predict.predict(texts=texts, artifacts=artifacts)['natural-language-processing', 'mlops']

Behavioral testing can be extended to adversarial testing where we test to see how the model would perform under edge cases, bias, noise, etc.

texts = [

"CNNs for text classification.", # CNNs are typically seen in computer-vision projects

"This should not produce any relevant topics." # should predict `other` label

]

predict.predict(texts=texts, artifacts=artifacts)['natural-language-processing', 'other']

When our model is deployed, most users will be using it for inference (directly / indirectly), so it's very important that we test all aspects of it.

This is the first time we're not loading our components from in-memory so we want to ensure that the required artifacts (model weights, encoders, config, etc.) are all able to be loaded.

artifacts = main.load_artifacts(run_id=run_id)

assert isinstance(artifacts["label_encoder"], data.LabelEncoder)

...Once we have our artifacts loaded, we're readying to test our prediction pipelines. We should test samples with just one input, as well as a batch of inputs (ex. padding can have unintended consequences sometimes).

# test our API call directly

data = {

"texts": [

{"text": "Transfer learning with transformers for text classification."},

{"text": "Generative adversarial networks in both PyTorch and TensorFlow."},

]

}

response = client.post("/predict", json=data)

assert response.status_code == HTTPStatus.OK

assert response.request.method == "POST"

assert len(response.json()["data"]["predictions"]) == len(data["texts"])

...While these are the foundational concepts for testing ML systems, there are a lot of software best practices for testing that we cannot show in an isolated repository. Learn a lot more about comprehensively testing code, data and models for ML systems in our testing lesson.