Yesterday I saw a fun demo by Nayuki using simulated annealing to reconstruct photographs whose columns have been shuffled.

For example, the photograph Blue Hour in Paris (CC licensed by Falcon® Photography):

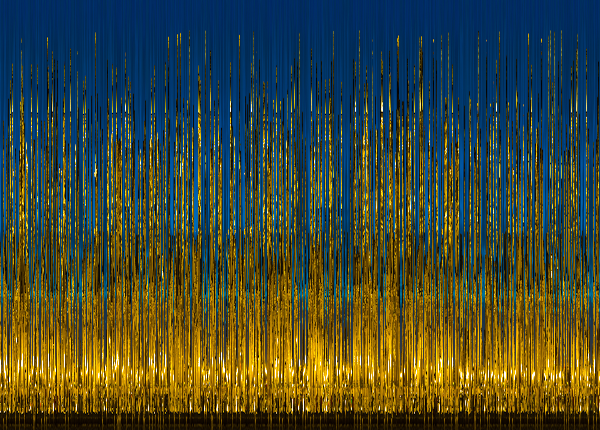

is shuffled to produce:

and the simulated annealing algorithm (starting temperature 4000, 1 billion iterations) reconstructs this:

Subsequently Sangaline showed that the images can be reconstructed faster and more effectively using a simple greedy algorithm to pick the most-similar column at each step. The greedy algorithm produces this:

which is quite close to the original, though you can see some misplaced columns in the sky at the right.

Our task is to piece the columns of pixels together so that, over all, adjacent columns are as similar as possible. Think of the columns as being nodes in a weighted graph, with the edge-weight between two columns being a dissimilarity measure. Then we are looking for a Hamiltonian path of minimum weight in the graph. So it is an instance of the Travelling Salesman Problem.

(A small technical note: since we want a Hamiltonian path rather than a Hamiltonian cycle, we add a dummy node to the graph that has weight-0 edges to all the other nodes. If we can find a least-weight Hamiltonian cycle on this augmented graph, we remove the dummy node to obtain a least-weight Hamiltonian path on the original graph.)

This project uses a fast approximate solver for the Travelling Salesman Problem to reconstruct the images quickly and perfectly.

You will notice that the image is flipped, but otherwise reconstructed perfectly. It is impossible in general to distinguish an image from its flipped version when the columns have been shuffled, and all the algorithms mentioned here produce flipped reconstructions half the time.

Apart from that, I believe this algorithm can correctly reconstruct all the images in Nayuki’s demo.

One interesting thing I found is that the result is sensitive to the dissimilarity measure used. I have used the same measure as the other projects mentioned here: the sum of the absolute values of the differences in the R/G/B channels, summed over all pixels in the column. If instead we use the square rather than the absolute value, the image is reconstructed incorrectly as follows:

This is not a failure of the TSP algorithm: in fact this mangled image has a better score than the original, using the sum-of-squares measure!

- Clone the repository

- Run

maketo download and shuffle the images, and download and compile LKH. - Now you can run

make reconstructto reconstruct the images from their shuffled versions using LKH.

You can also run make nayuki to reconstruct the images using Nayuki’s simulated annealing code.

You will need a working build environment (Make and a C compiler), and curl is used to download files from the web. You also need libpng >= 1.6 (which the Makefile assumes to be in /usr/local, but that is easy to change). The simpler bits of image manipulation are done using Python 2 and require the Python Imaging Library or a compatible fork such as Pillow.

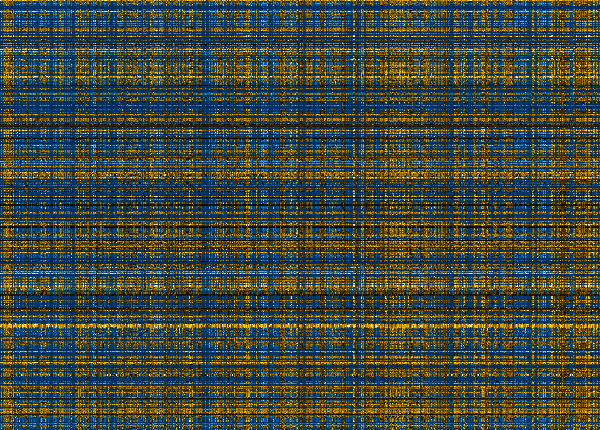

It is perhaps not surprising, but rather striking, that if we shuffle the columns and then the rows to obtain a really scrambled-looking image like this:

that it can nevertheless be reconstructed perfectly by applying the algorithm twice, first to the rows and then to the columns. Of course now the result may be flipped vertically as well as horizontally, but in this case I happened to get lucky twice and it came out in the same orientation as the original:

The code for the double-shuffling and reconstruction is in the branch double-shuffling. Switch to that branch and run make double_reconstruct to try it.